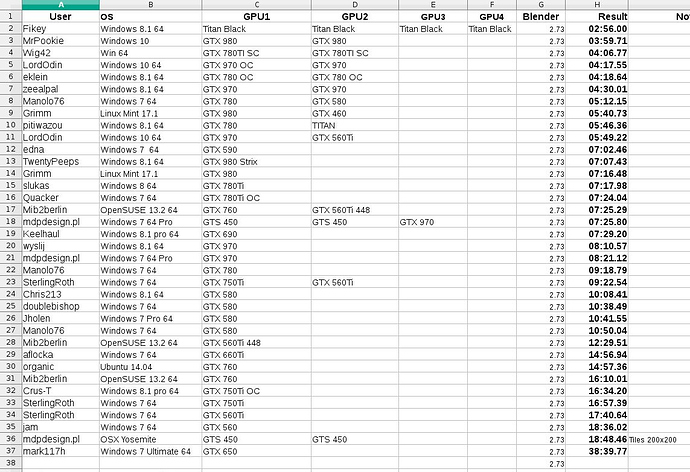

Comes in at 2:56.

Windows 7 x64 gtx 580

10:38.49

OS: Windows 8.1 64bit

GPU: EVGA GTX580 Classified Ultra 3gb

Time: 10:08.41

Can you post the Spreadsheet file when you add updates?

Hi LordOdin, I added a link to the first post.

When I post new entries the file is also updated.

Cheers, mib

Most likely.

Nope, I turned SLI off. You can’t make such a mistake really because in SLI mode it renders as fast as a Pentium 100! :o

Windows 7 / 64

GTX 590

07:02:46

Good to see the old 590 holding its own against the big boys (even the 690, somehow). Sounds like a hairdryer, mind you.

Hi, the fun with the GTX 500s may be over when 2.74 comes with CUDA 7.0.

New numbers:

Cheers, mib

FIKEY

Can you please run a test with one GPU?

I would like to see a Titan Black compared to a GTX 980, single vs. single.

OS: Windows 8.1 64bit

GPU: 980M 8gb

Time: 09:14:54

Interestingly, system conditions seem to influence the render time; there is some flux when doing several tries (which of course is only feasible if you do not have 30min+ render times…)

OS: Win8.1, i7-3770k, 32GB RAM

2x780 6GB Strix (Asus - 941MHz GPU; the name says OC edition, but I am not sure if overclocking is built in or has to be turned on via GPU tweak tool - I did not touch the factory settings)

Autotile size (via Add-On):

(with activated SLI)

05:02.03

(without activated SLI)

05:02.37

256x256 tile size

(with activated SLI)

05:13.84

(without activated SLI)

05:14.23

What I do not understand is why turning SLI on or off seems to make no difference for me (the differences here are clearly due to system conditions). Blender recognises both 780s in the user prefs regardless of the SLI setting in the global application settings of the NVIDIA display driver. I expect that this setting only influences how Blender uses the graphics cards for UI display, not the actual GPU rendering. But I guess we have to ask the developers about that. To me this means that I can leave SLI globally turned on and still render on 2 GPUs separately without a performance drop.

Does anyone have any comments on the SLI settings? Is my assumption correct?

EDIT (another go to look at the flux):

2x780 6GB strix (sli on; UI folded - not command line)

5:16.98

1x 780 6GB strix (single card)

09:23.48

I think you are misunderstanding how SLI works.

In Blender the video cards are seen as individual units, and with 2 cards it will render two tiles in parallel.

If it were an SLI scenario, both cards would render the same tile in parallel, which of course is a waste of time, as both cards would be rendering the same data twice.

So turning SLI on and off shouldn’t make any difference to Blender rendering. It’s the CUDA compute device setting in Blender which changes how the tiles will be rendered.
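To make the "two tiles in parallel" idea concrete, here is a small toy model in Python. It is only a sketch of a generic greedy tile queue, not the actual Cycles scheduler; the function name, the tile costs and the device speeds are all made up for illustration.

```python
import heapq

def simulate_render(tile_costs, device_speeds):
    """Toy greedy tile queue: each device grabs the next tile once it is free.

    tile_costs    -- work units per tile (hypothetical numbers)
    device_speeds -- work units per second for each device (hypothetical)
    Returns the wall-clock time at which the last tile finishes.
    """
    # Heap of (time_when_free, device_index); every device is idle at t = 0.
    free_at = [(0.0, i) for i in range(len(device_speeds))]
    heapq.heapify(free_at)
    finish = 0.0
    for cost in tile_costs:
        t, dev = heapq.heappop(free_at)       # earliest available device
        done = t + cost / device_speeds[dev]  # it renders this tile alone
        finish = max(finish, done)
        heapq.heappush(free_at, (done, dev))
    return finish

tiles = [1.0] * 64                                  # 64 equally heavy tiles
one_gpu = simulate_render(tiles, [1.0])             # a single card
two_gpus = simulate_render(tiles, [1.0, 1.0])       # two independent cards
print(one_gpu, two_gpus)
```

In this model each tile is rendered by exactly one card, so a second identical card halves the time. Nothing in it depends on an SLI switch, which is the point made above.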

Ok, that confirms my assumption. I just did not understand why people referred to a drawback from SLI, but they probably used the SLI combination as “a card” in the Blender selection for the GPU device. Some people referred to “turning SLI off for improved performance”, and as this is my first SLI setup, as of today, I was confused by that information. But all clear now.

Thank you Mr.Pookie.

I’ve done a test with each individual card and my results:

GPU 1 - 08:32.43

GPU 2 - 08:18.94

GPU 3 - 08:15.56

GPU 4 - 08:21.06

4x GPUs - 02:50.40
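For anyone who wants to see how well the four cards scale, here is a rough Python sketch using only the times posted above. It assumes that the render rates of independent cards simply add up in the ideal case, which ignores fixed costs such as scene loading, so treat the result as a ballpark figure. The helper name `to_seconds` is my own.

```python
def to_seconds(stamp):
    """Convert a 'MM:SS.cc' render time into seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + float(seconds)

# Single-card times and the 4-GPU time as posted above.
single = ["08:32.43", "08:18.94", "08:15.56", "08:21.06"]
combined = to_seconds("02:50.40")

# Assumption: with perfect scaling the rates (1 / time) of the cards add up.
ideal = 1.0 / sum(1.0 / to_seconds(s) for s in single)
efficiency = ideal / combined

print(f"ideal 4-GPU time: {ideal:.1f} s, measured: {combined:.1f} s")
print(f"scaling efficiency: {efficiency:.0%}")
```

With these numbers the ideal time is roughly 125 s against 170.4 s measured, so part of each render is time that does not shrink when more cards are added.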

I’m not sure what the hell is going on with the build times but now it’s down to 2:50 with all 4 GPUs. I’ve been doing re-test after re-test all day and I’m getting all kinds of different results with 2:50 so far being the fastest.

I’m doing some basic research into building my own GPU render farm at some point in the future and I was wondering if someone could explain why the GTX 980, with 4gb ram and 2048 cuda cores, is somehow outperforming a Titan Black that has 6gb ram and 2880 cuda cores? What other factors am I overlooking that would influence these results?

Bear in mind that I’m what you would call a jock walking blind, deaf & dumb in the land of geeks. So explain it to me like I’m a child so I know exactly what to purchase GPU-wise. Cheers!

The Titan (Kepler) and 980 (Maxwell) are 2 different architectures, so you can’t compare the core count or clock speed of one with the other because they are 2 completely different things.

It would be interesting to test the upcoming (rumoured) 980ti, which is rumoured to run with 2560 Cuda cores!

I know, lots of rumours, but I am also planning a GPU farm in the coming months!

What has changed is that the new scene in the 2.73 benchmark suits the Maxwell CUDA core architecture quite well. With the previous scene, from the 2.72 benchmark, the Titan/GTX 780 (Kepler) had noticeably better performance than the GTX 970/980 (Maxwell).

Cycles performance has not really changed between 2.72 and 2.73. To be fair, we would have to try different types of scenes with different complexity to get a better comparison of performance.

Overclocking would give a good performance benefit. Notice how much higher the base clock of the GTX 980 (1126 MHz) is than that of the Titan Black (889 MHz).
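As a quick sanity check on the clock argument, the sketch below multiplies core count by base clock, using only the figures quoted in this thread. This "cores x MHz" number is a crude paper figure of my own choosing, not a real performance metric, and it says nothing about how much work each core does per cycle.

```python
# Figures quoted in this thread: (CUDA cores, base clock in MHz).
cards = {
    "GTX 980 (Maxwell)": (2048, 1126),
    "Titan Black (Kepler)": (2880, 889),
}

naive = {}
for name, (cores, mhz) in cards.items():
    # cores x MHz = core-cycles per microsecond, a naive throughput guess
    naive[name] = cores * mhz
    print(f"{name}: {cores} cores x {mhz} MHz = {naive[name]:,}")

naive_ratio = naive["GTX 980 (Maxwell)"] / naive["Titan Black (Kepler)"]
print(f"GTX 980 / Titan Black on paper: {naive_ratio:.2f}")
```

Even with the higher clock factored in, the Titan Black stays ahead on paper, so the clock alone does not explain the 980's result in this scene. That fits the point that cores from different architectures cannot be compared one to one.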

Hi, I can add the old 2.72 file here too, and everyone can render that file again with 2.73; new participants render both files.

I will rework the list.

I have heard about different render times on the same system, but nobody knows why. Maybe it has something to do with one or more cards throttling down as the temperature changes.

Cheers, mib