Hi Cekuhnen,

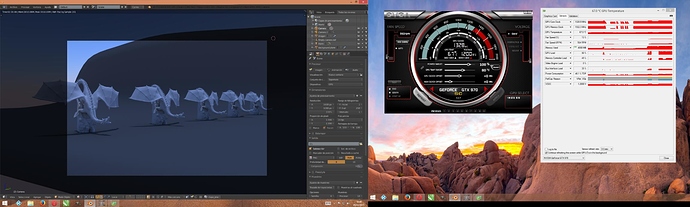

The best render comparison test I found was running a GTX 970 and a 4GB GTX 760, both of which we purchased recently for some render boxes, on exactly the same scene. In our experience the 4GB GTX 760 renders about two and a half times faster on any scene where memory usage approaches the 3.5GB level. We purchased a standard 4GB GTX 760, not a Ti version. Nvidia have now discontinued the GTX 760 in Australia.

On lighter scenes, or scenes with just a few models, where video memory consumption stays under 3.5GB, the 4GB GTX 970 renders much faster than the 4GB GTX 760, in fact nearly twice as fast. However, this situation reverses once you hit 3.5GB.

So as soon as you build a detailed character, rig it, and place it in an environment of any detail, it’s quite likely you’ll exceed 3.5GB of memory usage, and then the GTX 970 will run slower than the 4GB 760. I’m working on a music video project with characters, character animation and space scenes, and we’ve optimised the scenes as much as possible; however, nearly all our scenes do tip over 3.5GB of memory usage.

So in actual render times, taking for example the scene we had rendering last night: the GTX 760 renders the scene in 3.5 minutes because it has a true 4GB limit and the card’s performance doesn’t clip, while the GTX 970’s performance drops dramatically over 3.5GB and it takes nearly two and a half times longer, i.e. between 8 and 9 minutes, to render the exact same scene.

So 3.5 minutes per frame for a complex scene render on the GTX 760 and 8-9 minutes for the exact same scene and frame on the GTX 970.
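
To put rough numbers on those per-frame times, here is a small Python sketch. The 3.5 and 8-9 minute figures come from the render above; the frame count is purely hypothetical and only there to show how the gap adds up over an animation.

```python
# Per-frame render times reported above, in minutes.
GTX_760_MIN = 3.5
GTX_970_MIN_LOW, GTX_970_MIN_HIGH = 8.0, 9.0

# Hypothetical animation length, NOT a figure from our project.
HYPOTHETICAL_FRAMES = 1000


def slowdown(slow_minutes, fast_minutes):
    """How many times longer the slower card takes per frame."""
    return slow_minutes / fast_minutes


def extra_hours(frames, slow_minutes, fast_minutes):
    """Extra render time in hours over a whole sequence of frames."""
    return frames * (slow_minutes - fast_minutes) / 60.0


low = slowdown(GTX_970_MIN_LOW, GTX_760_MIN)
high = slowdown(GTX_970_MIN_HIGH, GTX_760_MIN)
print(f"GTX 970 takes {low:.2f}x to {high:.2f}x as long per frame")

extra_low = extra_hours(HYPOTHETICAL_FRAMES, GTX_970_MIN_LOW, GTX_760_MIN)
extra_high = extra_hours(HYPOTHETICAL_FRAMES, GTX_970_MIN_HIGH, GTX_760_MIN)
print(f"Extra time over {HYPOTHETICAL_FRAMES} frames: "
      f"{extra_low:.0f} to {extra_high:.0f} hours")
```

The ratio works out to somewhere between roughly 2.3x and 2.6x, which is where the "about two and a half times" figure comes from.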

So our experience is that the more expensive card with more CUDA cores (the 4GB GTX 970) takes around two and a half times longer to render the same frame as the 4GB GTX 760, and is a complete dog when it comes to rendering any scene with a moderate level of detail and texture usage in it.

There’s a petition at change.org signed by over 10,000 Nvidia customers highlighting Nvidia’s dishonesty and fraud in the marketing of the GTX 970 as a full 4GB Vram graphics card. It isn’t. Nvidia deliberately downgraded the Vram on the card to compromise it so that the GTX 970 performance wouldn’t match the GTX 980 and their whole campaign on the GTX 970 has been false advertising throughout.

https://www.change.org/p/nvidia-refund-for-gtx-970

Shocking interview with Nvidia Engineer about the 970 fiasco

In terms of how many 4k textures it takes in your scene before this becomes an issue, and notwithstanding all the other factors like scene geo, rigs etc., the scenes we have rendered these tests on all involve a 4k scene background, two to three 4k maps on built scene geometry, and then about 4-5 4k maps on character and foreground elements. We’ve tried where possible to utilise 2k textures. However, consider a 3D workflow where you are trying to keep scene geometry low and are therefore using normal and displacement maps to achieve high quality looking geo with low poly counts. A detailed character may only be 15 thousand polys but may have a 4k displacement map, a 4k normal map, a 4k color map, and possibly a selection of other maps (specular, AO etc.) set up at 2k to try and bring down texture usage. It doesn’t take many image maps on one character alone to see where having a 3.5GB limitation on what is sold as a 4GB card becomes an issue.
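
As a back-of-the-envelope illustration of how those maps add up, here is a rough Python sketch. It assumes uncompressed RGBA textures and compares 8-bit against 32-bit float channels; that storage layout is my assumption, since how a given renderer actually holds textures in VRAM varies, and geometry, rigs and render buffers come on top of this.

```python
MIB = 1024 ** 2


def texture_mib(width, height, channels=4, bytes_per_channel=1):
    """Uncompressed size of one texture in MiB (assumed RGBA layout)."""
    return width * height * channels * bytes_per_channel / MIB


def total_mib(count_4k, count_2k, bytes_per_channel=1):
    """Combined size of a set of 4k and 2k maps in MiB."""
    return (count_4k * texture_mib(4096, 4096, 4, bytes_per_channel)
            + count_2k * texture_mib(2048, 2048, 4, bytes_per_channel))


# One character as described above: 4k displacement, normal and color
# maps, plus specular and AO at 2k.
print("character, 8-bit: ", total_mib(3, 2), "MiB")
print("character, float: ", total_mib(3, 2, bytes_per_channel=4), "MiB")

# Whole scene as described above: 1 background + 2-3 geometry maps
# + 4-5 character/foreground maps, i.e. 7 to 9 maps at 4k.
for maps in (7, 9):
    print(f"{maps} x 4k, 8-bit:", total_mib(maps, 0), "MiB |",
          "float:", total_mib(maps, 0, bytes_per_channel=4), "MiB")
```

Under these assumptions a single 4k map is 64 MiB at 8 bits per channel and 256 MiB as 32-bit float, so the texture set alone can account for a large share of 3.5GB if maps end up stored at higher precision.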

A couple of people in this thread have dismissed this, saying “it’s a first world problem, who cares” or “who uses that much VRAM anyhow”. Those comments don’t address the issue. The point is that Nvidia advertised and misled its customers into purchasing what is supposed to be 4GB of VRAM headroom. This problem affects many, many people who do use and need the full 4GB of VRAM, from gamers to 3D artists. Hence more than 10,000 Nvidia customers now signing the petition.

If anyone considering buying a card for 3D work reads this: you will probably be frustrated with this card when you build a scene of any complexity, and it’s definitely better to either opt up for a 980 or find a GTX 760 Ti on eBay. Even the standard GTX 760 4GB version (not Ti) will outperform the GTX 970 by a huge margin on any scene of any complexity, i.e. if you are serious about 3D work and need a card that renders well, avoid the GTX 970.

see u around!
