And what’s your point? We and the developers know it’s not a new concept; the point with the Oculus is that it’s the first serious attempt at making it a consumer product. Low latency, a high field of view, fast displays, some big names behind it, and a reasonable price.

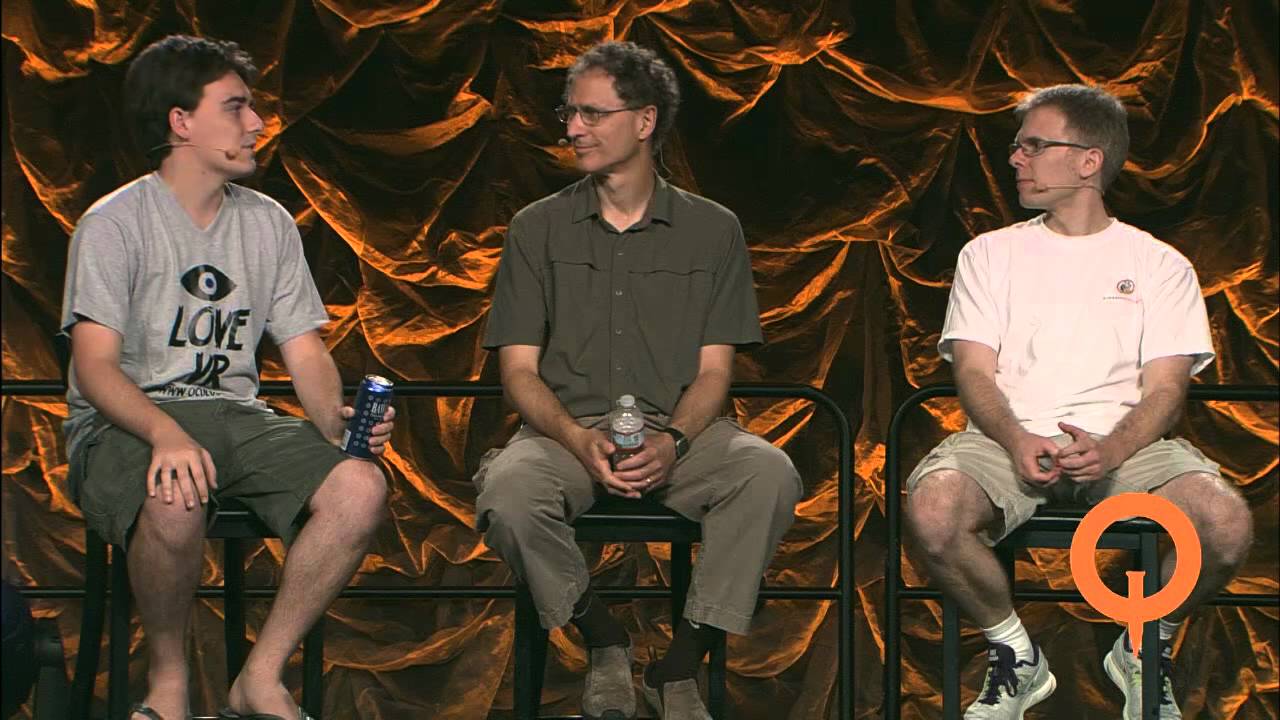

It’s not really a consumer product. Carmack said as much in his QuakeCon keynote (if you bothered to listen through all 3 hours of it). It’s a neat experimental thingy aimed at developers that is likely to make you seasick and is supported by just one game and an SDK so far. It’s much like discrete 3D accelerators, which in the beginning were not all that useful or practical. I’d wait one or two generations before getting one.

I wonder how many epileptic seizures it’s going to cause…

It seems like they mostly say that because the kit you’ll get from the Kickstarter won’t have any software except Doom 3 BFG. They say that the developer kit (the actual hardware) will be a “nice finished product”, which does sound a little vague, but I guess it can’t be that bad. He also says that they will work on making a more polished consumer version, though.

Source:

I will also wait one generation.

I don’t understand if you’re just trolling with all your posts or not.

Exactly, and this piece of kit will make people really feel like they’re IN the game, instead of watching it from the outside. It is stunning and could lead to nausea, though. But I’m pretty sure people will eventually love it, and use it to get ‘away from it all’.

Also, the gentleman in charge of the project is specifically trying to make an open system that anyone can use for anything.

It’d be great to see the Rift SDK integrated into the BGE. The Rift itself is just a low-latency display with magnetometers, accelerometers, and gyros built in (the SDK apparently does the heavy lifting), so you’d need to implement head tracking for the camera, plus a stereoscopic camera system with whatever distortion correction you need to compensate for the optics.
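To make the head-tracking half more concrete, here is a minimal pure-Python sketch (no BGE calls, since I’m not assuming any particular engine API) of how a stereo rig could be derived from the tracker’s head pose: the camera takes the head orientation 1:1, and each eye is offset by half the interpupillary distance (IPD) along the head’s local right axis. The `(w, x, y, z)` quaternion layout, the +X-is-right convention, the 64 mm default IPD, and the function names are all assumptions for illustration.

```python
import math


def quat_rotate(q, v):
    """Rotate vector v by the unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q.xyz, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(q.xyz, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def eye_positions(head_pos, head_quat, ipd=0.064):
    """Return (left_eye, right_eye) world positions for a tracked head.

    Assumes head-local +X points to the viewer's right; ipd is in metres
    (0.064 is a hypothetical default, not a value from the Rift SDK).
    """
    half = ipd / 2.0
    right_axis = quat_rotate(head_quat, (1.0, 0.0, 0.0))
    left = tuple(p - half * r for p, r in zip(head_pos, right_axis))
    right = tuple(p + half * r for p, r in zip(head_pos, right_axis))
    return left, right


if __name__ == "__main__":
    # Head at 1.7 m height, turned 90 degrees about the vertical (Y) axis.
    s = math.sqrt(0.5)
    print(eye_positions((0.0, 1.7, 0.0), (s, 0.0, s, 0.0)))
```

Each eye camera would then use the head’s orientation unchanged and only differ in position; keeping both cameras parallel (rather than toed-in) is the usual choice because it avoids vertical disparity at the edges of the view.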

I suspect the second thing would be easier than the first.
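For the distortion side of the stereoscopic camera system: the Rift’s lenses introduce pincushion distortion, so each eye’s rendered image is usually pre-warped with a radial (barrel) distortion that cancels it out. A minimal sketch of the per-pixel lookup, assuming a polynomial in r² around a per-eye lens center; the coefficients `k` and the `center` default are placeholders, not values taken from the SDK, and in a real engine this would run in a fragment shader rather than in Python.

```python
def barrel_warp(u, v, center=(0.5, 0.5), k=(1.0, 0.22, 0.24, 0.0)):
    """Map an output pixel (u, v) in [0, 1] texture space to the source texel.

    The scale grows with distance from the lens center, so the edges of the
    rendered image get squeezed inward (barrel distortion) to offset the
    lens's pincushion distortion. k holds hypothetical polynomial
    coefficients. Returns None when the sample falls outside the rendered
    image, meaning that output pixel should be drawn black.
    """
    dx, dy = u - center[0], v - center[1]
    r2 = dx * dx + dy * dy
    scale = k[0] + k[1] * r2 + k[2] * r2 ** 2 + k[3] * r2 ** 3
    su, sv = center[0] + dx * scale, center[1] + dy * scale
    if not (0.0 <= su <= 1.0 and 0.0 <= sv <= 1.0):
        return None
    return su, sv


if __name__ == "__main__":
    for uv in [(0.5, 0.5), (0.75, 0.5), (1.0, 1.0)]:
        print(uv, "->", barrel_warp(*uv))
```

In practice the lens center for each eye sits off the middle of its half of the screen (toward the nose), which is why `center` is a parameter rather than a constant.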

This is solved by having lenses between your eyes and the screen, which place the apparent focal point at a comfortable distance from your eyes. These lenses are also what allow the screen to appear as though it wraps around your head.
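The lens trick can be put in numbers with the thin-lens equation 1/f = 1/d_o + 1/d_i (real-is-positive convention): when the screen sits just inside the focal length, d_i comes out negative, meaning a magnified virtual image appears much farther away than the physical panel. A small sketch, with a made-up 50 mm focal length and 45 mm screen distance (these are not the Rift’s actual optics):

```python
import math


def image_distance(focal_m, object_m):
    """Solve the thin-lens equation 1/f = 1/d_o + 1/d_i for d_i (metres).

    A negative result is a virtual image on the same side as the screen,
    which is what the eye sees when the screen is inside the focal length.
    """
    inv = 1.0 / focal_m - 1.0 / object_m
    if inv == 0.0:
        return math.inf  # screen exactly at the focal point: image at infinity
    return 1.0 / inv


def magnification(focal_m, object_m):
    """Lateral magnification m = -d_i / d_o."""
    return -image_distance(focal_m, object_m) / object_m


if __name__ == "__main__":
    f, d_o = 0.050, 0.045  # hypothetical lens and screen distance
    print(image_distance(f, d_o), magnification(f, d_o))
```

With those numbers the virtual image lands about 45 cm away at roughly 10x magnification, so the eye focuses comfortably even though the panel is only a few centimetres from it.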

This thing is incredible.

If you look at this video, you see only a simple 3D interior with an even simpler garden around it.

But with the Oculus Rift:

It’s the first time I’ve spent several minutes looking at tiny details of the leaves, the books on the desk, etc.

On a plain PC screen, you see a house, trees, etc. With this, you see big houses and tall trees: the sense of how tall and how big things are is incredible.

For a Blender artist, showing their work through the Oculus Rift is really rewarding! It’s a delight for the eye and shows off all the work done by the 3D modelers.

There’s also the issue of motion sickness due to the latency between your head movements and the position of the camera in the scene, but I read that it will be solved completely for the next version.
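A back-of-the-envelope way to see why latency matters: during a steady head turn, the rendered view trails your head by roughly angular velocity × latency. The sketch below computes that error and the simplest possible mitigation, constant-velocity prediction of where the head will be when the frame is actually shown. The 100°/s turn and 50 ms latency are hypothetical figures, and this is not a claim about what the Oculus SDK does internally.

```python
def lag_error_deg(angular_velocity_dps, latency_s):
    """Angle (degrees) by which the view trails the head during a steady turn."""
    return angular_velocity_dps * latency_s


def predict_yaw_deg(yaw_deg, angular_velocity_dps, latency_s):
    """Constant-velocity extrapolation: render for the yaw the head will have
    once the frame reaches the display, instead of the yaw when sampled."""
    return yaw_deg + angular_velocity_dps * latency_s


if __name__ == "__main__":
    # Hypothetical: 100 deg/s head turn, 50 ms motion-to-photon latency.
    print(lag_error_deg(100.0, 0.050))  # about 5 degrees of lag
    print(predict_yaw_deg(30.0, 100.0, 0.050))  # about 35 degrees
```

Prediction only helps while the head keeps moving the same way; on sudden stops or reversals it overshoots, which is why cutting the latency itself matters more than extrapolating around it.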

There’s also the issue of resolution and how it might seem too low to make it comfortable to look at, but I also hear that resolution will probably improve as well.
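One way to see why the resolution feels low is to compare pixels per degree of view. A rough sketch, assuming a 1280-pixel-wide panel split between both eyes at about 90° horizontal field of view per eye, versus a hypothetical 1920-pixel desktop monitor 0.53 m wide viewed from 0.6 m; all of these figures are assumptions, not measured specs.

```python
import math


def horizontal_fov_deg(width_m, distance_m):
    """Horizontal angle (degrees) subtended by a flat screen viewed head-on."""
    return math.degrees(2.0 * math.atan((width_m / 2.0) / distance_m))


def pixels_per_degree(pixels, fov_deg):
    """Average angular resolution across the field of view."""
    return pixels / fov_deg


if __name__ == "__main__":
    # Hypothetical headset: 1280 px panel shared by two eyes, ~90 deg per eye.
    hmd = pixels_per_degree(1280 / 2, 90.0)
    # Hypothetical desktop: 1920 px across 0.53 m, viewed from 0.6 m.
    desk = pixels_per_degree(1920, horizontal_fov_deg(0.53, 0.60))
    print(f"headset ~{hmd:.1f} px/deg, desktop ~{desk:.1f} px/deg")
```

Under those assumptions the headset gets around 7 px/deg against roughly 40 for the monitor. This is only an average: the lens distortion concentrates pixels near the center of view, so the real figure varies across the field.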

In general, I like the concept of the Oculus VR; it looks like we’re finally getting virtual reality technology to a point where it can hit the mainstream. However, I think I saw an article saying the field of view stops at some point when you look downward, so you can see the buttons on the controller or whatever device you’re using to play (talk about killing the alternate-reality aspect by letting you see the real world at any moment).

As for the stereoscopic code the BGE needs before it can be used with the Oculus, Benoit Bolsee has already taken a step in that direction with this commit adding support for 3D TVs (which one would think gets the BGE at least partway to full support).

A fair few people will be put off by the issue of motion sickness.

Imagine using the Oculus to position yourself “inside” a Blender modeling session, using a 3D mouse or VR glove to do the editing. That’s where, in my opinion, the real attraction of this new technology lies for Blender users. I’ve been wondering for years now why there’s no support for using 3D monitors to visualize three dimensions inside the Blender viewports, which would give better depth distinction and help when editing complicated geometry. I’ve considered coding this myself, but at the moment there are more pressing matters.

Yes, true depth perception is what the Oculus Rift can provide for 3D modelers and sculptors. It would be damn awesome to have some kind of OC support, for sculpting at least.

Good news: from a programmer’s point of view, OC visualization and navigation of the 3D View would work just the same for modeling as for sculpting.

It looks to me like Oculus has been doing a pretty super job throughout development at fixing many of the inherent problems with VR (latency, 1:1 mapping between head motion and virtual motion, depth perception), but I still doubt that it’s actually going to catch on as a meaningful part of people’s workflow. Maybe I’m wrong, but it actually seems more likely to me for something like CastAR to be used in real practical work applications, if only because it’s less intrusive, but that still doesn’t strike me as very probable. I think it’s less a technological barrier and more an inherent issue with blinding yourself to the outside world just for some depth perception.

I think it’ll work well in some areas and other areas not so well. I know it’s not exactly the same but look at the results of Google Glass, albeit more AR than VR.

As for manipulating virtual things in mid-air, as with LEAP, it simply sucks and just isn’t practical, for various reasons including gorilla arm and the lack of tactile feedback. In terms of productivity, it fails to make things more intuitive and actually tends to create more inefficiency; it works best as a brief novelty item. A simple example: to move something, you need to move your entire arm instead of twitching the mouse, and you lose precision and accuracy on top of that.

Building on that idea, for AR/VR presentation of 3D things, I’d rather use my mouse to rotate my view than rotate my entire head, again because of accuracy. Idk, maybe the OR would help with stretching your neck lol. It’s hard to beat the dexterity of our hands and our fine motor skills, which is why the mouse remains king.

With gaming, it works well where the view is static, such as in a cockpit, where the direction you look doesn’t influence your movement. Idk about an FPS. In real life, when people shoot, they line up the weapon with their line of sight and maintain that alignment as they move. With the OR, it would play out one of two ways: either you use your head to aim, or you use your head to rotate your view and the mouse to move an imaginary crosshair on the screen, i.e. your virtual gun. I guess this works if you want to make the game closer to real life, or more difficult? Servers would probably split into mouse/keyboard-only and Rift-only, since mouse and keyboard allow superhuman movements. Also, people are physically lazy, so if a game requires physical movement, that might be a deterrent.

I think the OR will fare well in the entertainment arena, though, since it’s visual escapism. As for the motion sickness, I’ve heard some people get used to it and some don’t.

It’s not to say the technology will never amount to anything, but IMHO it still isn’t mature enough for mass appeal.

This was an interesting read:

I suppose I should clarify my point, because I actually still don’t think augmented reality will catch on that hard for technical work, at least not anytime soon. My thinking is that, while the displays are relatively inexpensive, I haven’t seen an equivalent non-intrusive input system that would really take advantage of the extra degrees of visual fidelity. If 3D monitors catch on, I can still imagine them working as a sort of shadowbox system, with the 2D mouse tracking on a very clear 2D plane, but none of the 3D input systems seem to offer the same comfort, precision, and ease of use as a mouse. Also, once the 3D display starts tracking the user’s head and loses its borders, it’s harder to imagine a simple 2D input tracking very well, either because the user could cross the cursor’s plane of movement, or because the cursor’s plane would follow the user’s head and force a stationary posture.

Maybe that’ll change; I think Apple has proven that a trackpad can, in some cases, be a superior primary input device, and it looks like Valve has done some impressive things with their new controller, which claims to offer the same precision as a mouse in a gamepad form factor (so far third parties agree), but these are all still 2D input systems.

I dunno, give it 20 years and I’ll tell you how I feel about it then. 3D display hasn’t made many revolutionary improvements since Dial M for Murder, but it has come a long way.