You get mipmapping, yes, but I didn’t see any change in memory use the last time I tested that. As far as I can tell, Cycles loads all tiles in the map at render startup and makes no attempt to flush them. Plus, as noted, any speed benefit is lost from having to use the OSL backend.

If Cycles supported TX directly, could that eliminate the slowdown? Otherwise, having to use OSL for it seems kind of crippling.

I’m not trying to start a render feud. I want to compare them so I’m well informed. That’s all. Knowing strengths and weaknesses leads to better planning and decision-making.

It works, but only with non-packed images…

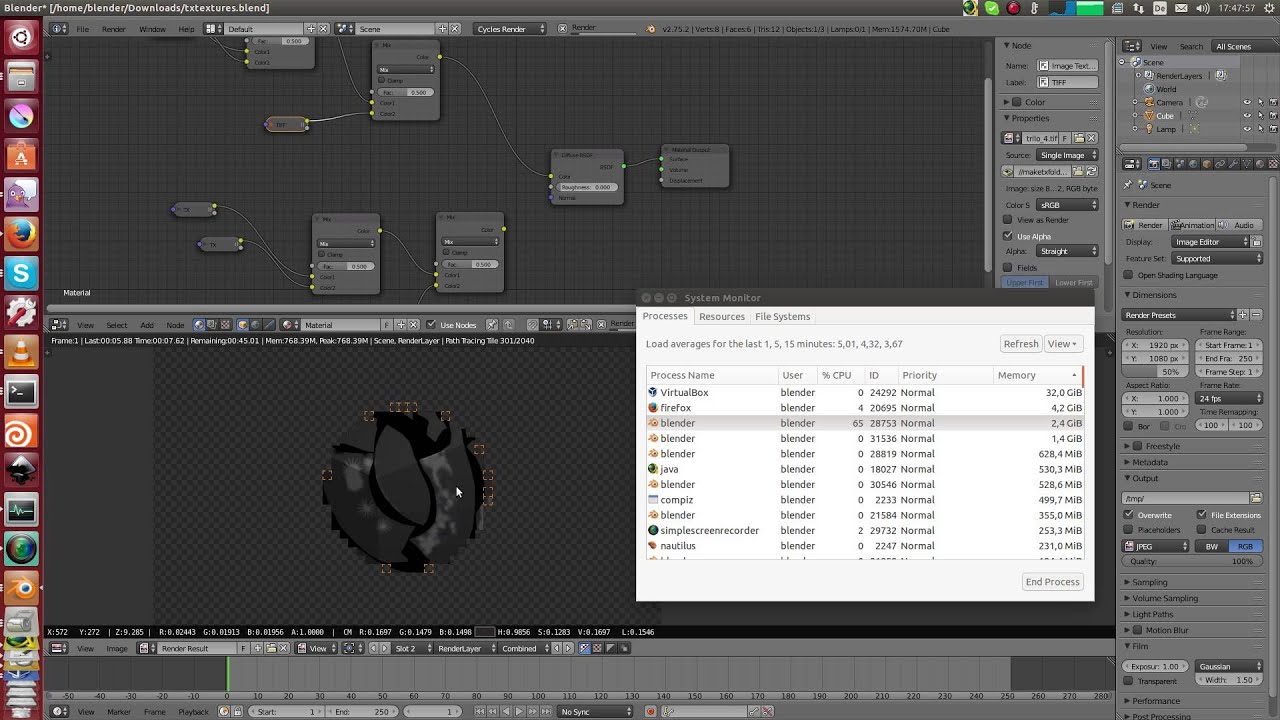

Here’s a short video (three 8K textures, SVM vs. OSL).

@marcoG_ita the memory consumption is really low, a couple of MB.

Thanks, it’s awesome and sad at the same time (forcing OSL use).

Does anyone know why this way of shrinking memory usage was never implemented for SVM during Gooseberry? (Is it possible at all?)

It’s not that bad. With a few ifs you can bring a lot of speed back!

With OSL you can optimize your shader much better than with SVM. OSL can also handle very large shaders without problems (SVM can’t handle my Lego shader, for example).

Of course more speed would be better. Maybe an OIIO/OSL update would help here (juicyfruit :)?)

Ok, I guess I screwed up my previous tests with OSL somehow. It does work. But…OSL…

Coming from Maya, I’m going to jump straight into RenderMan, muwahaha. It’s the orgasm of animation rendering. Well, at least it used to be.

I’m also wondering why the Gooseberry team never tried implementing the TX format for memory savings. It seems like a no-brainer and possibly trivial, considering the support is already there for the most part.

Conceivably Cycles could take all textures (including packed textures) and convert them to TX format for internal use before rendering. And Cycles could be made to use TX without requiring OSL?

As I understand it, SVM lacks the functionality to find out which tiles/mipmaps it needs and doesn’t store textures in a way that lets tiles be loaded individually. This functionality would need to be custom-coded by the Cycles devs and isn’t exactly trivial (or so DingTo tells me when I hassle him about it).

The way Arnold handles it is with an option to look for a .tx version in the same folder as the specified texture. So you make your .png or .exr version or whatever, specify that file in your shader like usual, then run maketx to cache it. Then enable that option and instead of the specified .png or whatever, it looks for a file with the same name but with the .tx extension and loads that instead. That way you can convert a scene to use cached textures just by enabling that flag. (it’s fairly simple to make a script or tool that sweeps through every image in a project and runs maketx on all of them).
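As a rough illustration of the two ideas above (look for a same-named .tx next to the texture, and sweep a project running maketx on every image), here is a minimal Python sketch. The function names, the `IMAGE_EXTS` set, and the `dry_run` flag are all made up for this example; the only real tool it relies on is OIIO’s `maketx` with its `-o` output option, and it is not how Arnold or Cycles actually implement anything.

```python
from __future__ import annotations

import subprocess
from pathlib import Path

# Assumed list of source formats worth converting; adjust for your project.
IMAGE_EXTS = {".png", ".exr", ".jpg", ".tif"}


def tx_sibling(texture: Path) -> Path:
    """Same folder, same name, but with the .tx extension."""
    return texture.with_suffix(".tx")


def resolve_texture(texture: Path, use_tx: bool = True) -> Path:
    """Return the cached .tx if the option is on and the file exists,
    otherwise fall back to the texture that was actually specified."""
    tx = tx_sibling(texture)
    return tx if use_tx and tx.is_file() else texture


def maketx_command(texture: Path) -> list[str]:
    """Command line that writes the tiled, mipmapped .tx next to the source."""
    return ["maketx", str(texture), "-o", str(tx_sibling(texture))]


def convert_project(root: Path, dry_run: bool = True) -> list[list[str]]:
    """Walk a project folder and build (optionally run) maketx for each image."""
    commands = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix.lower() in IMAGE_EXTS:
            cmd = maketx_command(path)
            commands.append(cmd)
            if not dry_run:
                # Requires maketx from OpenImageIO to be on PATH.
                subprocess.run(cmd, check=True)
    return commands
```

With `dry_run=True` it only returns the commands it would run, which is handy for checking what a sweep would touch before converting anything.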

Maketx is a utility that’s part of OpenImageIO, btw. Not sure if that was ever answered earlier in the thread. .tx isn’t the only format used for this type of thing; mental ray has its own .map format, for example. .tx is just a format OIIO has built-in support for doing this with. And since Cycles already uses OIIO to handle images from disk, it makes sense to use it.

As for why SVM tilecache was never done for Gooseberry, I really have no idea. I wasn’t surprised at all to see the team run into issues with memory use and slow preview responsiveness due to the texture handler. (and tbh, I didn’t recall it working that well with OSL either, but it seems it does). Maybe coding it IS more complicated than all of us realize. Who knows?

It’s good to have good quality options to choose from, and RenderMan certainly has advantages over Cycles, true displacement mapping being one of my favorites. But I am missing an important point in this conversation: RenderMan is not free. Yes, I know, it is free for non-commercial use, but for small commercial projects this might carry weight. Also, RenderMan works perfectly on its own, both on Linux and Windows, but the add-on still needs a lot of work. It’ll get there, I’m sure, but for the moment it is not yet a real option for serious work: for example, hair doesn’t seem to work (although this might be my lack of RenderMan experience).

Also, RenderMan does not support GPU rendering, so Cycles clearly has the advantage here (actually Pixar states in item 31 of their Q&A: “As the technology matures for comprehensive production usage we will reveal more in future.” Come on guys, GPU computing is not yet mature? Welcome to 2015).

Anyway, like some previous commenters said: use what works for you. I myself am happy to have both options now :eyebrowlift2:

CUDA/OpenCL computing on the GPU can do a lot of things now, but it’s not yet capable of instructions that even come close to what the CPU can handle (just look at the struggle to get more complex shaders such as SSS working without massive slowdowns).

With new technology that would allow GPUs to make use of standard system RAM for rendering instead of just the onboard memory, the architectural limitations are the only major thing that is left. Then it’s a matter of reducing the heat and power output so people won’t need to install expensive water-cooling systems on top of the standard fans.

@ace_dragon: good points of course, especially the RAM limitation, but GPU computing is already quite mature, and the ALU capabilities of the latest architectures are increasing rapidly. Anyway, it is just one of the aspects to consider :eyebrowlift2: and certainly something where Cycles is at the forefront (think of the latest AMD support).

Renderman has dat denoise button tho.

I was playing with it yesterday, the denoiser literally reduces/removes at least half of the noise or more in any given render at a tiny tiny cost to accuracy.

How is it doing that? Could Cycles add a denoise feature?

I’ve implemented a simple median filter as a post process step. At high resolutions it works amazingly well. I’d even suggest increasing the size of your render, applying the filter, then reducing the image size to what you intended. That works even better.
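For anyone wondering what a median filter actually does, here is a minimal pure-Python sketch on a grayscale image stored as a list of rows. It is not the poster’s Java program; the `median_filter` name, the `radius` parameter, and the choice to crop the window at the image border are assumptions for illustration. Each output pixel becomes the median of its neighbourhood, so isolated bright “fireflies” get replaced while hard edges mostly survive.

```python
from statistics import median_low


def median_filter(image, radius=1):
    """Replace every pixel with the median of its (2*radius+1)^2 neighbourhood.

    `image` is a list of equally long rows of numbers (one channel).
    At the borders the window is simply cropped to the image.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                image[yy][xx]
                for yy in range(max(0, y - radius), min(height, y + radius + 1))
                for xx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            # median_low always returns a value that is really in the window,
            # so no new in-between values are invented at cropped borders.
            row.append(median_low(window))
        out.append(row)
    return out
```

For an RGB image you would run the same thing once per channel. A real tool would use an image library for speed; this version is only meant to show the idea.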

The PRMan denoiser is more complicated and, if I understand correctly, takes into account underlying geometry. It tries to separate the lighting variation from the actual surface variation and remove noise from the lighting portion before recombining them. My personal opinion is that while it may lead to slightly more accurate denoising, the procedure I described is almost as good and much simpler.

Can such a thing be done in the compositor? I render animations; for stills I can wait.

You can’t do it from the compositor, but I wrote a small Java program that will median filter all .png images in the same folder. I don’t see how to add it as an attachment here. Anyone know how to do this?

I’d love to see median filter added as a compositor node.

Could you put it on filedropper for me real quick?

http://www.filedropper.com/

Also could I get a basic rundown of how to use it?

I don’t have a filedropper account. Here’s a link but it won’t be live for long (sorry future forum readers) https://www.dropbox.com/s/shldv3fgibwyryr/medianFilterAllPngs.jar?dl=0

To use it you just put it in the same directory as a bunch of .png frames and double click it. It will create denoised images and leave your originals untouched.

So my typical workflow is to double or quadruple the image size in Blender (which increases the number of pixels by 4x or 16x). Then you reduce the number of samples per pixel by the same factor (4x, 16x or whatever). That gives you the same total number of samples for the image, but you gain information about “subpixels”. Render all those frames into a folder. Copy the Java program into that folder. Run the Java program with a double click. Create a new Blender file with compositor only. Read in all the large, filtered frames and scale them down to the original size you wanted.

This sounds like a lot of steps, but I have it somewhat automated for my project and it saves tons of time and money.
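The resolution/sample trade-off in that workflow is just arithmetic, and can be sketched like this. The `supersample_settings` helper is hypothetical (it is not a Blender API); it only works out which numbers you would type into the render settings.

```python
def supersample_settings(width, height, samples, scale=2):
    """Scale the resolution up and the per-pixel samples down by the same
    pixel-count factor, so the total sample count stays roughly constant.

    scale=2 doubles each side (4x the pixels), scale=4 gives 16x the pixels.
    """
    factor = scale * scale                 # how many times more pixels
    new_samples = max(1, samples // factor)  # never drop below 1 sample
    return width * scale, height * scale, new_samples


# Example: a 1920x1080 frame at 400 samples, rendered at double size.
w, h, s = supersample_settings(1920, 1080, 400, scale=2)
print(w, h, s)
```

If the sample count doesn’t divide evenly by the pixel factor, the integer division rounds down, so the big render ends up slightly cheaper than the original rather than more expensive.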