Hey guys, remember when this thread was about a render engine?

Nope. What render engine?

I think that my almost hilariously curmudgeonly posts have been somewhat misunderstood. I don’t specifically want a movie that looks like a moving Van Gogh, I just want something different. Making something avant garde that is also accessible is the challenge. That’s what they’re getting paid for. By reconstituting the same stuff, they are backing down from the challenge.

And as I said, I appreciate the pull of success. Frozen did over $1 billion worldwide and over $10 billion in merchandise. But so did Transformers 2, 3, and 4. To argue that Frozen deserves respect simply because of its success would be to argue the same thing for Transformers, and those films were, in some cases, not just bad but detestable. In the broadest sense, Transformers was art, but in most conceptions of something that would be described as art, it is not, and people ate it up.

That’s a really good point and something to which I have given a great deal of thought. I hated that Frozen succeeded and also loved it, because so many people behind the scenes poured their hearts and souls into the project, and the crushing disappointment of having a film fail must be overwhelming.

The problem is, thinking about those people just pisses me off more. The beautiful animation, the specific scenes, all of the details are the work of great artists toiling away in Disney. Just as with the special effects in Transformers, my criticism does not fall on them. It falls on the overarching management of the film and the philosophy that it espouses. So, from that perspective, their great art is being used for an unworthy end.

I am likewise pissed off because those toiling artists are frequently overworked and underpaid. You don’t need to travel far to find tales of woe from animators and special effects artists. I want the artists to succeed, but I don’t want the executives, the broader company, even at times the directors and writers, to succeed. I’m completely aware that this makes no sense.

And also, for the record, so I don’t seem like an absolutely crazy old jerk, I loved Wreck-It Ralph. I admit that much of that may have come from pure nostalgia for my childhood, but I don’t care. I still get all weepy when he’s plunging toward the volcano.

Now while it is indeed true that the conditions of the artists might be deplorable, you are aware that if the artists and writers get all of the revenue, the company that hires them wouldn’t be able to stay afloat, right? I don’t find any disagreement that the artists could be paid more simply because of the incredible amount of skill required to hold such a job (and rising, not at all like the fast food industry where the requirements are actually dropping).

Hey guys, remember when this thread was about a render engine?

Well, one of the points brought up is whether the engine’s performance and quality would hold up when used for photoreal VFX, arch-vis and the like, especially when compared to engines like Arnold and Weta’s Manuka.

It’s still a unidirectional path tracer with BSDFs and all the other physically correct acronyms you can throw at it, so why wouldn’t it?

Wreck-It Ralph and the upcoming Big Hero 6 (which looks gorgeous btw) show that Disney is willing to forgo just making princess movies. It seems like they will be doing a tick-tock kind of thing where a movie that may appeal more to boys (such as Wreck-It Ralph) gets released in the fall, then a movie that appeals to girls gets released the next year at around the same time.

Reply to original topic…

From what I understand, their renderer is more like path tracing, not the most advanced form of rendering.

On the other hand, Lukas (the guy from adaptive sampling) is also working on Metropolis rendering.

It’s a method that optimizes the ray solving cache.

People should really be wary about all these “advanced” methods coming out of universities. These people have no real-world constraints, they rarely do honest benchmarks and often enough they don’t even publish their implementation. Dealing with this stuff can be a huge waste of time.

The Metropolis algorithm is just another mathematical method (not related to rendering in itself), which is applied to stochastic rendering (like path tracing), because we can. Once applied, you have to find some contrived scenes that demonstrate that the implementation has some benefit. One such scene is the ubiquitous “caustics pool”. Such a scene could be rendered more efficiently by a method that is rooted in the actual problem, not abstract mathematics. On the downside, your renderer is now more complicated, harder to understand and harder to maintain/optimize (especially for people without a PhD in math). It’s also harder to use and has different artifacts (have you ever tried rendering an animation with Metropolis sampling?).
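To show what “just another mathematical method” means here, this is a minimal sketch of plain Metropolis sampling in one dimension, with no rendering involved. The target function `ramp`, the step size and the seed are all made up for illustration; in a renderer the “target” would be the contribution of a light path, which is not shown.

```python
import random

def metropolis_samples(target, start, step, count, rng):
    """Draw `count` correlated samples with density proportional to `target`."""
    x = start
    fx = target(x)  # must be > 0 at the starting point
    samples = []
    for _ in range(count):
        # Symmetric proposal: a small random step around the current state.
        candidate = x + rng.uniform(-step, step)
        f_candidate = target(candidate)
        # Accept with probability min(1, f(candidate) / f(x)).
        if f_candidate >= fx or rng.random() < f_candidate / fx:
            x, fx = candidate, f_candidate
        samples.append(x)  # a rejected move repeats the current state
    return samples

def ramp(x):
    """Hypothetical target: unnormalized density f(x) = x on [0, 1]."""
    return x if 0.0 <= x <= 1.0 else 0.0

rng = random.Random(1)
samples = metropolis_samples(ramp, 0.5, 0.25, 50000, rng)
mean = sum(samples) / len(samples)  # the exact mean of this density is 2/3
```

Note that consecutive samples are correlated, since each one is a small mutation of the previous one.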

That’s the reason why renderers such as Arnold are not implementing those methods. If an actual company like Disney implements something for production, you can tell it has some real-world impact for them. For the people rendering their Stanford Bunnies at the universities, that’s certainly not the case.

The MLT that Lukas has worked on actually does provide some excellent speedups for scenes with more difficult light paths (mainly areas dominated by indirect light, volumes, and shiny surfaces). The specific algorithms are also a bit more advanced than the original ones; that specific one is borrowed from the SmallLux project (it’s come a long way since the Veach thesis).

Things in this department should see a lot more benefit once he figures out how to make it work adaptively (it would be built on top of adaptive sampling, which is probably why the latter is being worked on first in its own patch). However, I wouldn’t hold my breath yet as that is a very difficult problem to solve.

He mentions specifically that SmallLux’s version worked a lot better for how Cycles is designed than the original one used in the PBRT project.

In short, the new methods work better for tiled rendering and produce far fewer fireflies.

Update: http://www.blendernation.com/2015/08/06/non-blender-disneys-hyperion-renderer/

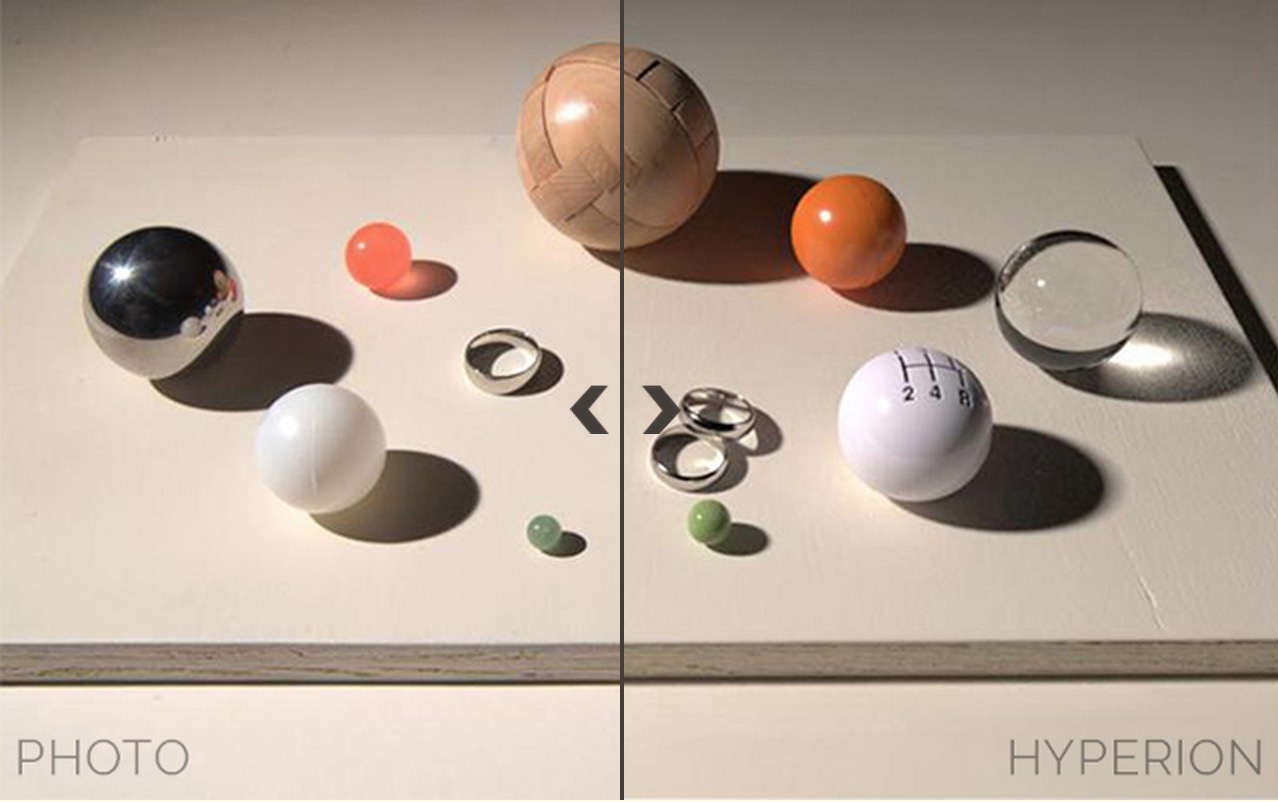

This image looks like a pure photo.

I wonder what innovations it could bring to achieve a result like that. Also, what about the render times?

One of the most interesting is the caching of the rays as the video shows. Instead of calculating each ray by brute force anew, they are calculated and stored in some sort of index instead. Hopefully a big percentage of the calculations can be re-used like that.

This reminds me of the old days when calculating sin and cos was too expensive and programmers created lookup tables to speed up the process.
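For anyone who hasn’t seen the old trick, here is a minimal sketch of a precomputed sine table. The table size of 1024 and the names `fast_sin` / `fast_cos` are just assumptions for the example; old implementations picked whatever fit in memory and often used fixed-point values.

```python
import math

TABLE_SIZE = 1024  # hypothetical resolution, one entry per 1/1024 of a full turn

# Pay for the expensive sin() calls once, up front.
SIN_TABLE = [math.sin(2.0 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]

def fast_sin(angle):
    """Approximate sin(angle in radians) with the nearest table entry."""
    index = int(round(angle / (2.0 * math.pi) * TABLE_SIZE)) % TABLE_SIZE
    return SIN_TABLE[index]

def fast_cos(angle):
    """cos(x) is sin(x + pi/2), so the same table serves both."""
    return fast_sin(angle + math.pi / 2.0)
```

With nearest-entry lookup the error is bounded by half a table step, roughly 0.003 at this size.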

Do you have any ideas for squeezing some optimizations into Cycles?

Note that if the math is right, the tonemapping is the same and the shaders are comparable, all path tracers (like Hyperion and Cycles) will produce more or less the same result (edge cases notwithstanding).

One of the most interesting is the caching of the rays as the video shows. Instead of calculating each ray by brute force anew, they are calculated and stored in some sort of index instead. Hopefully a big percentage of the calculations can be re-used like that.

It’s not re-using the rays, it’s re-ordering them so that they are more coherent. This only makes sense when the cost for sorting is lower than the cost of incoherent rays. It’s also deferring the evaluation of the shaders so that texture accesses are coherent.
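A rough sketch of the two ideas, reordering rays and deferring shading, might look like the following. This is not Disney’s code: binning by dominant axis, the `mesh_id` / `face_id` sort key and all function names are assumptions made up for the illustration.

```python
from collections import defaultdict

def direction_bin(direction):
    """Return one of six bins: sign plus dominant axis of the direction."""
    axis = max(range(3), key=lambda i: abs(direction[i]))
    sign = "+" if direction[axis] >= 0 else "-"
    return sign + "xyz"[axis]

def bin_rays(rays):
    """Group (origin, direction) rays so each batch points roughly the same way."""
    bins = defaultdict(list)
    for ray in rays:
        bins[direction_bin(ray[1])].append(ray)
    return dict(bins)

def sort_hits_for_shading(hits):
    """Order deferred hits by (mesh_id, face_id) so texture reads are grouped."""
    return sorted(hits, key=lambda hit: (hit["mesh_id"], hit["face_id"]))

def count_mesh_switches(hits):
    """Count changes of mesh between consecutive hits (a crude proxy for incoherence)."""
    return sum(1 for a, b in zip(hits, hits[1:]) if a["mesh_id"] != b["mesh_id"])

rays = [((0, 0, 0), (0.9, 0.1, 0.0)),
        ((0, 0, 0), (-0.2, 0.1, -0.95)),
        ((1, 0, 0), (0.8, -0.3, 0.2))]
batches = bin_rays(rays)

hits = [{"mesh_id": m, "face_id": f} for m, f in [(2, 5), (1, 3), (2, 1), (1, 0)]]
switches_before = count_mesh_switches(hits)
switches_after = count_mesh_switches(sort_hits_for_shading(hits))
```

The sort itself costs time, which is why this only pays off when incoherent access is the more expensive part.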

In their example, this really pays off because incoherent texture reads are very expensive. A problem I have with the paper is that they seem to only test the Ptex library, which is notorious for scaling issues (Arnold developers have complained about this). The render time without textures was actually more or less the same as Arnold, so for geometry, reordering doesn’t seem to pay off. Without testing normal textures, there’s no way to tell if it’s worth it otherwise.

Another way they find a great payoff is where they limit their machine to 16GB memory and thereby force an out-of-core rendering situation (the whole scene takes over 40GB of memory). Again, that’s a pretty contrived example. What you want to do is have 64GB of RAM (quite cheap these days) and tell your artists to stay inside that limit.

Exactly - this ray reordering etc. has nothing to do with realism, it’s just an optimization that helps for massive scenes.

Also, regarding Cycles: It should be mentioned that the kind of optimization used in Hyperion really only helps for massive scenes, for simpler ones the sorting and I/O overhead eats away the few percent of speedup gained by the coherence. So, considering the target of Cycles (being a production renderer for smaller studios IIRC), this kind of optimization is not really what it needs. Of course, it’s still pretty cool.

I picked one of these up with 128 GB of RAM and a 2.4 GHz processor for $200, and it did an hour on the benchmark from Gooseberry. Is there better hardware out there? Yes. Is it used? Yes. But that is 200 dollars for 24 physical cores. So yes, 64 GB of RAM is very cheap these days.

Hope it’s fine to bump this, I was searching for a Cycles vs Hyperion comparison and landed here.

From my limited understanding so far, both engines should achieve pretty much the same visual quality. The problem with Cycles is of course, the monster render times it employs! I understand that Disney’s developers figured out a way around that in their raytracer? If so I’m hoping to understand its functionality better! I doubt a company like Disney would share source code with outsiders, though I generally hope that one way or another Cycles finds a way to break the chains too.

In any case, some form of comparison would be nice to see. I’d like to know better what the differences and speedups are, even if just out of plain curiosity.

The other thing about Hyperion that sets it apart from most consumer engines (free and commercial) is the fact that detail and the number of polygons in a scene is a complete non-issue. Zootopia for instance had scenes with many billions of unique polygons with no memory or speed issues whatsoever.

The only other engine that comes close to having caching like that is Arnold. It is true that Cycles may have caching in the future with Mai Lavelle’s ongoing work on the displacement code, but it probably won’t be at that point (at least in the initial iteration).

Path tracing engines are a trade off between speed and scene complexity. Hyperion is used by Disney, a major studio with the people and skill to create the kinds of massive scenes that it was designed for. Feed Hyperion a Cornell box or a less complex scene and Cycles would probably beat it hands down speed wise, and not by a small amount either. Cycles is intended as an efficient renderer for small studios. As a result it has features and optimizations that prioritize access speed over complexity. Feed it a scene from Zootopia and Cycles wouldn’t even be able to render it.

Two different engines designed for two very different uses. You can’t really compare them to each other at all.

Anyway … I believe it’s possible to emulate it.

Though it might be a lot of work to set up, it would be interesting if it did indeed decrease render time.

Instead of a bundle of light, reduce the image size so a single “virtual” photon has an effect over a wider area (like multiple beams).

For example, start with low-res renders at 64x48, 128x96, 256x192, 512x384, and 1024x768.

In the end, scale all the images so they match 1024x768, and blend them together again.

One might need to alter the seed value for best results.
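As a toy sketch of that idea (not tested against any real renderer): `fake_render` below is a made-up stand-in that returns a gradient with noise, and the sizes are shrunk so it runs quickly. Nearest-neighbour upscaling and a plain average are assumptions too; a real setup would probably use a smoother filter and weighted blending.

```python
import random

def fake_render(width, height, seed):
    """Hypothetical stand-in for a renderer: a gradient plus per-pixel noise."""
    rng = random.Random(seed)
    return [[x / (width - 1) + rng.uniform(-0.2, 0.2) for x in range(width)]
            for _ in range(height)]

def upscale_nearest(image, width, height):
    """Resize to width x height by nearest-neighbour sampling."""
    src_h, src_w = len(image), len(image[0])
    return [[image[y * src_h // height][x * src_w // width] for x in range(width)]
            for y in range(height)]

def blend(images):
    """Average equally sized images pixel by pixel."""
    n = len(images)
    return [[sum(img[y][x] for img in images) / n
             for x in range(len(images[0][0]))]
            for y in range(len(images[0]))]

FINAL = (64, 48)  # small stand-in for the final resolution
sizes = [(8, 6), (16, 12), (32, 24), (64, 48)]

# A different seed per layer, so the noise patterns do not line up.
layers = [upscale_nearest(fake_render(w, h, seed), *FINAL)
          for seed, (w, h) in enumerate(sizes)]
result = blend(layers)
```

Keep in mind the low-res layers carry no fine detail, so the blend trades sharpness for lower noise rather than behaving like extra samples at full resolution.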

I wonder if it would be possible for Cycles to intelligently determine the size and complexity of the scene and enable/disable features like ray bundling to create the optimal situation for each case.

I know that the seeds of such a thing have been worked on in the form of feature-adaptive kernels, but this would go a step further in that it’s not just looking at what shading/rendering features are being used.