Great!

Thank you very much for your help getting it to work.

I tested the serialized exporter and it seems to work great. But instances make it crash: they are exported as two shapes with the same ID, so Mitsuba crashes.

A (dirty) workaround would be to use the object ID instead of the mesh ID, since they are different for instances.

Also, in this line (under the `# create shape xml` comment):

`self.openElement('shape', { 'id' : translate_id(obj.data.name) + "-mesh_" + str(i), 'type' : 'serialized'})`

`str(i)` always seems to be 0. Is it maybe supposed to be `str(self.mesh_index)`?

In general, it would be best to use Mitsuba instances for this.

Yes, I noticed today that it crashes with instances while testing more complex scenes, and I am looking into a fix. Maybe I'll use the workaround first and switch to Mitsuba instances after some more coding. First, though, I want to debug the serializer: it looks like it crashes with some complex surfaces, but I'm not sure.
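For reference, the Mitsuba scene format already has a mechanism for this: the geometry is declared once inside a `shapegroup` shape and placed several times by `instance` shapes that `ref` the group. Below is a minimal sketch that writes that structure with Python's standard `xml.etree.ElementTree`. The helper `write_instances`, the ids, the file name and the translation-only transforms are hypothetical. The `version` attribute should match your Mitsuba build.

```python
import xml.etree.ElementTree as ET

def write_instances(group_id, serialized_file, shape_index, offsets):
    """Return a <scene> with one shapegroup and one instance per offset.

    Hypothetical helper: offsets are (x, y, z) translations standing in
    for each Blender object's world matrix.
    """
    # Adjust the version attribute to the Mitsuba release you target.
    scene = ET.Element("scene", version="0.5.0")
    # The shared geometry is declared exactly once, inside the shapegroup.
    group = ET.SubElement(scene, "shape", type="shapegroup", id=group_id)
    mesh = ET.SubElement(group, "shape", type="serialized")
    ET.SubElement(mesh, "string", name="filename", value=serialized_file)
    ET.SubElement(mesh, "integer", name="shapeIndex", value=str(shape_index))
    # Each instance only references the group and carries its own transform,
    # so no two top-level shapes share an id.
    for x, y, z in offsets:
        inst = ET.SubElement(scene, "shape", type="instance")
        ET.SubElement(inst, "ref", id=group_id)
        xform = ET.SubElement(inst, "transform", name="toWorld")
        ET.SubElement(xform, "translate", x=str(x), y=str(y), z=str(z))
    return scene

scene = write_instances("toe_group", "scene.serialized", 0,
                        [(0, 0, 0), (1.5, 0, 0)])
print(ET.tostring(scene, encoding="unicode"))
```

Each instance gets its own transform but no `id`, so the duplicate-ID crash cannot occur. The mesh data is also written only once.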

`str(i)` is 0 when only one material is assigned to the surface. If you have more than one material assigned to different faces, `str(i)` is the index of each set of faces.
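The grouping described above can be sketched in plain Python. This assumes each face carries a material index, as Blender faces do. `split_by_material` and the tuple layout are illustrative only and are not the exporter's actual code.

```python
from collections import defaultdict

def split_by_material(faces):
    """Group faces by material index.

    faces: iterable of (material_index, vertex_indices) tuples, a stand-in
    for Blender's mesh faces. Returns {material_index: [vertex_indices, ...]}.
    """
    groups = defaultdict(list)
    for mat_index, verts in faces:
        groups[mat_index].append(verts)
    return dict(groups)

faces = [(0, (0, 1, 2)), (1, (2, 3, 0)), (0, (4, 5, 6))]
for i, group in sorted(split_by_material(faces).items()):
    # One Mitsuba shape per group, so the "-mesh_<i>" suffix differs per group.
    print(f"Mesh-mesh_{i}: {len(group)} face(s)")
```

A surface with a single material produces a single group, which is why `i` is always 0 in that case.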

EDIT: Got it. The serializer crash happens when exporting UVs.

Ah, that is probably why the car scene was crashing for me too.

Btw, the LuxRender team added support (in the latest builds) for Irawan & Marschner woven cloth, copied from the Mitsuba irawan plugin. So maybe we should copy their cloth exporter settings into ours? Kind of funny if you ask me.


Hi All,

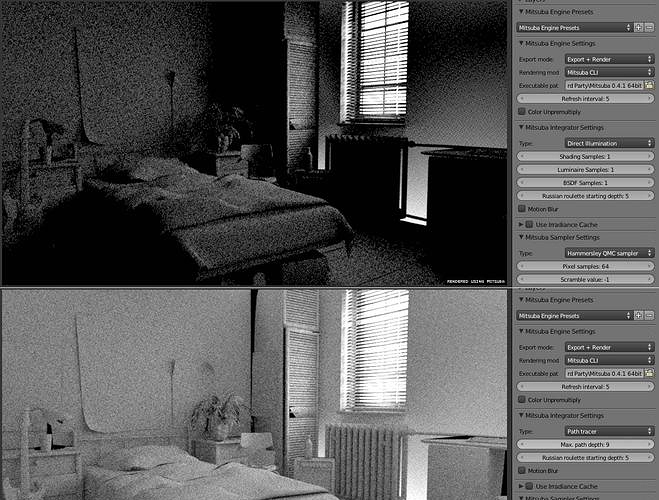

I conducted a few tests on a moderately detailed scene. This bedroom scene has 220,000 faces. The only light I used was a single HEMI light as a constant white background source.

The path tracing algorithms, including Direct Illumination, seem to produce an unnatural glow behind the radiator. The Volumetric Path Tracer produced the clearest image in the least amount of time, but the glow behind the radiator is still there. I did check the normals on the mesh, and they all point inwards into the room. Photon Mapping is a close second, but I am not sure how to use it in a second pass (RenderMan style). The splotchiness of the photon map makes it unusable for a final render.

These two algorithms seem to get the closest to what the scene should look like, if you ignore the noise of course.

Overall, I am wondering how to reduce noise. The render time for the above set of images ranged from 1 to 3 minutes per frame. While 1-3 minutes per frame might be acceptable for animation, the quality is not. Is there a way to boost the quality and reduce the noise while keeping render times at 1-3 minutes per frame?

Using an irradiance cache can help clear up noise faster with the path tracing algorithms, but if you set the quality level too low you'll get low-frequency noise in an animation, from what I understand. I haven't rendered any animations with irradiance caching, so I can't speak from experience, but this is what I've heard from everyone who has tried it or has worked on developing renderers with irradiance caching.
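In Mitsuba's XML, the irradiance cache is an `irrcache` integrator that wraps another integrator. Its `quality` parameter corresponds to the quality level mentioned above. Below is a minimal sketch that generates this element with `xml.etree.ElementTree`. The values are only example starting points, so check the parameter list and defaults in the manual for your Mitsuba version.

```python
import xml.etree.ElementTree as ET

def irrcache_integrator(inner_type="path", quality=1.0):
    """Build <integrator type="irrcache"> wrapping another integrator.

    quality controls how densely cache records are placed; see the
    Mitsuba manual for its exact meaning. Values here are illustrative.
    """
    outer = ET.Element("integrator", type="irrcache")
    ET.SubElement(outer, "float", name="quality", value=str(quality))
    # The nested integrator does the actual light transport the cache uses.
    ET.SubElement(outer, "integrator", type=inner_type)
    return outer

print(ET.tostring(irrcache_integrator("photonmapper", 2.0), encoding="unicode"))
```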

About speedup: the best solution would be an area lamp inside the room, but JW refused to make transparent area lights, since they are physically incorrect. But maybe if you ask him (again), he will change his mind.

Do you have glass in the window? If yes, remove it or replace it with a thin dielectric. Also make the color of objects 60% white (it will reduce the number of light bounces).
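Here is the reasoning behind the 60% tip. For diffuse surfaces of albedo ρ, a path's throughput after k bounces scales roughly like ρ^k, so with a darker albedo the energy a path carries becomes negligible after fewer bounces. Below is a quick back-of-the-envelope check. It assumes an idealized scene in which every bounce hits a surface with the same albedo.

```python
def bounces_until(rho, threshold=0.01):
    """Smallest k with rho**k < threshold (idealized: same albedo everywhere)."""
    if not 0 <= rho < 1:
        raise ValueError("albedo must be in [0, 1)")
    k, throughput = 0, 1.0
    while throughput >= threshold:
        throughput *= rho  # energy kept after one more diffuse bounce
        k += 1
    return k

for rho in (0.6, 0.8, 0.9):
    print(rho, bounces_until(rho))
```

A 60% white surface drops below 1% of the original throughput after about 10 bounces, compared with about 21 bounces at 80% and 44 at 90%.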

As far as I know, photon mapping (PM) or bidirectional path tracing should work best for this scene (with irradiance caching, which will remove the light blobs from PM).

About the light leak: maybe try adding thickness to the wall with a shell (Solidify) modifier.

Also, you may try using a point light as the outdoor light. It uses a 'sphere' primitive, so it should give less noise (at least compared with a rectangular area light; I'm not sure about the constant environment light). Just set its size to something big; maybe it will work…
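If you try a large sphere light, note that making it bigger at the same radiance also makes it brighter. A Lambertian emitter with radiance L and area A emits Φ = πLA, and a sphere has A = 4πr², so Φ = 4π²r²L. Below is a minimal sketch that chooses the radiance for a target power and writes a Mitsuba `sphere` shape with an `area` emitter. The helper names, the grey spectrum and the power units are assumptions.

```python
import math
import xml.etree.ElementTree as ET

def radiance_for_power(power, radius):
    """Radiance L so that a Lambertian sphere of this radius emits `power`.

    Uses Phi = pi * L * A with A = 4 * pi * r**2.
    """
    return power / (4.0 * math.pi ** 2 * radius ** 2)

def sphere_light(center, radius, power):
    """<shape type="sphere"> with an area emitter of matching radiance."""
    shape = ET.Element("shape", type="sphere")
    x, y, z = center
    ET.SubElement(shape, "point", name="center", x=str(x), y=str(y), z=str(z))
    ET.SubElement(shape, "float", name="radius", value=str(radius))
    emitter = ET.SubElement(shape, "emitter", type="area")
    # Uniform grey radiance for simplicity.
    ET.SubElement(emitter, "spectrum", name="radiance",
                  value=f"{radiance_for_power(power, radius):.6g}")
    return shape

print(ET.tostring(sphere_light((0, -20, 5), 5.0, 1000.0), encoding="unicode"))
```

Doubling the radius at a fixed power quarters the radiance, which keeps the overall exposure stable while you experiment with the size.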

Thanks for the tips.

I have placed area lights over the windows, which have no glass material at all, only empty faces, and removed the HEMI light from the scene. The HEMI light seems to be what produces the 'light leak', but only in the path tracing modes; the bidirectional and particle tracers seem to think the geometry is fine. So I think the HEMI light might be worth revisiting by the developers to check for programming bugs.

Here is the scene lit by Direct Illumination @ 1024 samples (which took too long, at 7:21).

So you can see this scene is in bad need of some kind of ambient lighting. This is why it would be nice if the HEMI actually worked. I did try adding in the irradiance cache but it produces smudgy results.

While remaining in direct lighting mode is there any way to add ambient lighting to this scene?

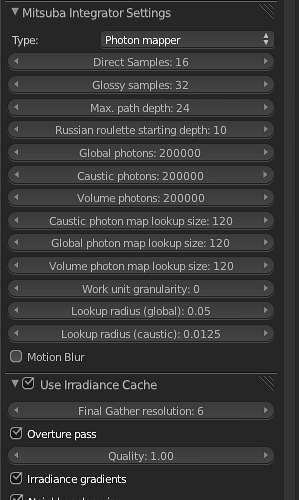

Try the Photon Mapper, with settings loosely based on what I read in the manual and on the Internet:

In the path tracer I've sometimes noticed a speed increase when setting Max. path depth to -1 (infinite), strange as it sounds ;-). The Sobol sampler is worth a try.
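To try these two tips outside the Blender UI, the matching Mitsuba XML is a `path` integrator with `maxDepth` set to -1 and a `sobol` sampler, which goes inside the `<sensor>` element. Below is a minimal sketch. The sample count is arbitrary, and `sobol` is only available in Mitsuba builds that ship that sampler.

```python
import xml.etree.ElementTree as ET

def path_settings(max_depth=-1, sampler="sobol", spp=64):
    """Return (integrator, sampler) elements; max_depth -1 means unbounded."""
    integrator = ET.Element("integrator", type="path")
    ET.SubElement(integrator, "integer", name="maxDepth", value=str(max_depth))
    # In the scene file this element is nested inside <sensor>, not <scene>.
    samp = ET.Element("sampler", type=sampler)
    ET.SubElement(samp, "integer", name="sampleCount", value=str(spp))
    return integrator, samp

for elem in path_settings():
    print(ET.tostring(elem, encoding="unicode"))
```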

Energy redistribution also gives me nice results, but it is a bit slower than path tracing or photon mapping.

Btw, testing render settings is much faster directly in the Mitsuba app (render settings changed in Mitsuba are not saved back to Blender anyway).
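Besides the GUI, you can keep the exported scene and override values from the command line. The `mitsuba` executable accepts `-D key=value` to substitute `$key` inside the XML and `-o` for the output file. Below is a minimal sketch that only builds the command lines for a sample-count sweep. It assumes the exported scene was edited to use `$spp` as its `sampleCount` value, and the file names are placeholders.

```python
def sweep_commands(scene="scene.xml", sample_counts=(16, 64, 256)):
    """Build one mitsuba invocation per sample count (nothing is executed).

    Assumes the scene uses $spp, e.g. <integer name="sampleCount" value="$spp"/>.
    """
    cmds = []
    for spp in sample_counts:
        out = f"test_{spp}spp.exr"
        cmds.append(["mitsuba", "-D", f"spp={spp}", "-o", out, scene])
    return cmds

for cmd in sweep_commands():
    print(" ".join(cmd))
    # To actually render: subprocess.run(cmd, check=True)
```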

There should be no difference in lighting between the area light and the hemi light for this scene (the area light must cover the whole window).

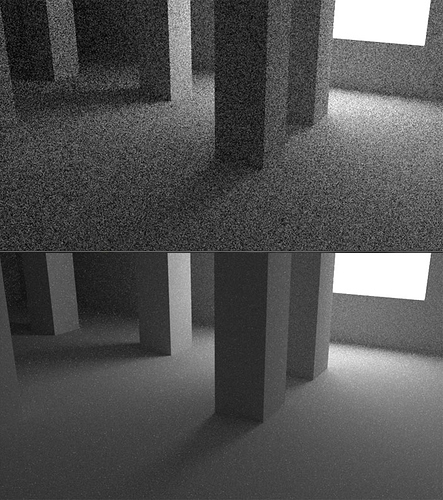

I did a 1-minute test render (path tracing, AMD 3-core, 2.6 GHz): the upper image uses the hemi light, the lower one the area light.

About the light leak: it only happens for me if the wall has 0 thickness. Maybe if you add thickness to the wall and floor, and scale the floor so it extends past the walls, it will fix it…

But if you are sure it is a bug, you could simplify the scene and report it to Wenzel Jakob: https://www.mitsuba-renderer.org/tracker/projects/mtsblend

@bashi: Thanks for the settings, I will try them out on another render.

@Jose: The problematic exterior wall is essentially a plane, but aren't all faces 0 thickness? The other walls are constructed the same way but had no problem.

I had this render already cooking. I tried bidirectional path tracing with a Max path depth of 3 and an Independent sampler using 2,048 samples. This render took a little over 2 hours to complete on my 3.5 GHz AMD quad core. This is the kind of quality I would like to present, but at 2 hours a frame, who has time? This is essentially a clay render as well. What will happen to render time when I actually start playing with materials?
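One rough way to reason about the time/noise trade-off: Monte Carlo noise (standard deviation) falls as 1/√N with the sample count N, while render time grows roughly linearly in N. The sketch below plugs in the numbers above (2,048 samples in about 120 minutes), assuming that idealized scaling holds.

```python
import math

def spp_for_budget(ref_spp, ref_minutes, budget_minutes):
    """Samples per pixel that fit the budget, assuming time is linear in spp."""
    return ref_spp * budget_minutes / ref_minutes

def noise_ratio(ref_spp, spp):
    """How much noisier (std. dev.) spp is than ref_spp, assuming 1/sqrt(N)."""
    return math.sqrt(ref_spp / spp)

spp = spp_for_budget(2048, 120, 1.5)  # about 25.6 spp in 1.5 minutes
print(spp, noise_ratio(2048, spp))    # roughly 9x the noise of the 2 h render
```

So with the same integrator, 1.5 minutes per frame means roughly nine times the noise of the 2-hour render. Closing that gap has to come from a different integrator or caching rather than from more samples alone.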

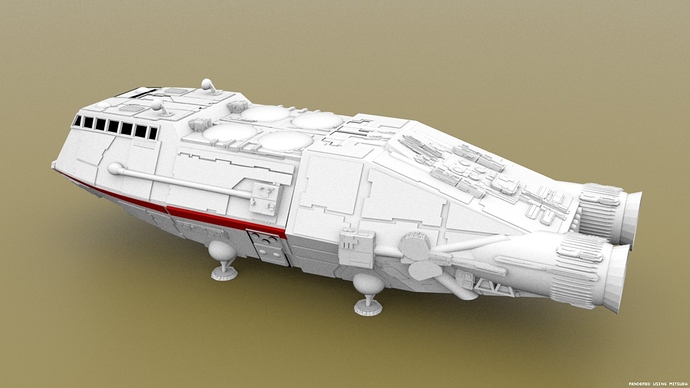

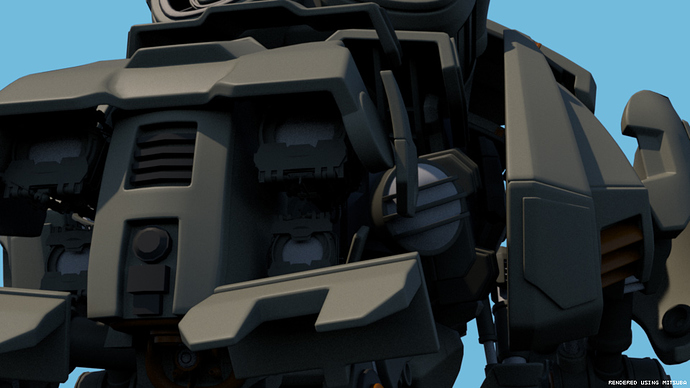

Here are a couple of models from Blendswap I rendered in Mitsuba using the Blender exporter.


I noticed that the quad bot from Tears of Steel was posted on Blendswap.

When I tried to render it there were a couple of problems in the Mitsuba Exporter that prevented it from rendering.

1.) If the camera is not on a visible layer, the exporter fails with a 'no sensor' error.

It might be worth revisiting the `isRenderable` list and making an exception for the camera type. For instance, if the object is a camera and is the active camera, it should be added to the list of objects to include in the scene even if it is on a layer that is not visible. As a workaround, I simply moved the camera to a visible layer.

2.) The model makes use of instances on the toes, so this produced a duplicate ID error for shape names upon export. A simple fix is to use the object name instead of the datablock name, as suggested above.

In `export/__init__.py`, around line 812 (inside `exportMesh`):

Change

`self.openElement('shape', { 'id' : translate_id(obj.data.name) + "-mesh_" + str(i), 'type' : 'serialized'})`

To

`self.openElement('shape', { 'id' : translate_id(obj.name) + "-mesh_" + str(i), 'type' : 'serialized'})`
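This change works because instances are separate objects that share one mesh datablock. As a result, `obj.data.name` repeats, while `obj.name` is unique within the .blend file. Below is a minimal sketch of the id scheme using plain stand-in objects. `translate_id` here is a hypothetical stand-in for the exporter's helper that just replaces characters awkward in XML ids, and the names are illustrative.

```python
import re
from types import SimpleNamespace

def translate_id(name):
    """Hypothetical stand-in for the exporter's helper: make a safe XML id."""
    return re.sub(r"[^A-Za-z0-9_-]", "_", name)

def shape_id(obj, i, use_object_name=True):
    """Build the shape id; i is the per-material sub-mesh index."""
    base = obj.name if use_object_name else obj.data.name
    return translate_id(base) + "-mesh_" + str(i)

# Three instanced objects sharing one mesh datablock, like the robot's toes.
toe_mesh = SimpleNamespace(name="Toe")
toes = [SimpleNamespace(name=f"Toe.{n:03d}", data=toe_mesh) for n in range(3)]

old_ids = [shape_id(o, 0, use_object_name=False) for o in toes]
new_ids = [shape_id(o, 0) for o in toes]
print(old_ids)  # the same id three times -> duplicate-ID error in Mitsuba
print(new_ids)
```

Note that this still writes the shared mesh once per object. The Mitsuba instances suggested earlier in the thread would avoid that duplication.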

With both these fixes in place I was able to render the scene.
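For fix 1.), the suggested exception could look like the sketch below. It assumes the Blender 2.6x properties `obj.layers`, `scene.layers`, `scene.camera` and `obj.hide_render`. The function only loosely mirrors the exporter's `isRenderable` check, and its real signature may differ. `SimpleNamespace` objects stand in for Blender data so the sketch runs outside Blender.

```python
from types import SimpleNamespace

def is_renderable(obj, scene):
    """True if obj should be exported (hypothetical version of the check)."""
    # Always keep the active camera, even on a hidden layer, so the scene
    # never ends up without a sensor.
    if obj is scene.camera:
        return True
    if obj.hide_render:
        return False
    # Otherwise require at least one layer shared with the visible scene layers.
    return any(o and s for o, s in zip(obj.layers, scene.layers))

cam = SimpleNamespace(type="CAMERA", hide_render=False, layers=[False, True])
cube = SimpleNamespace(type="MESH", hide_render=False, layers=[False, True])
scene = SimpleNamespace(camera=cam, layers=[True, False])
print(is_renderable(cam, scene), is_renderable(cube, scene))  # True False
```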

Cool renders!

Thanks for the bug reports. Will be fixed on next release.

One question for users of the addon. Right now the scene is created in a loop that goes through every object in the scene one after another. The result is a mix of all objects as they are on the list (camera, light, material, mesh, material, mesh, lamp, camera, …).

I have been thinking about running several loops instead of one to create a sorted scene file (all cameras, all lights, all materials, all meshes). What do you think? Would you like this change?
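A sorted layout doesn't strictly need several loops: a single stable sort with a category key gives the same grouping. Below is a minimal sketch with hypothetical category names and plain (kind, name) tuples standing in for the exporter's objects.

```python
from itertools import groupby

# Hypothetical section order for the scene file; unknown kinds go last.
SECTION_ORDER = ["camera", "light", "material", "mesh"]

def group_for_export(items):
    """items: (kind, name) pairs in scene order -> [(kind, [names]), ...].

    sorted() is stable, so objects keep their original order within a section.
    """
    rank = {kind: i for i, kind in enumerate(SECTION_ORDER)}
    ordered = sorted(items, key=lambda it: (rank.get(it[0], len(rank)), it[0]))
    return [(kind, [name for _, name in grp])
            for kind, grp in groupby(ordered, key=lambda it: it[0])]

scene_order = [("camera", "Cam"), ("light", "Sun"), ("material", "Red"),
               ("mesh", "Cube"), ("material", "Blue"), ("mesh", "Cone"),
               ("light", "Lamp")]
for kind, names in group_for_export(scene_order):
    # Each section could be written under an XML comment such as <!-- mesh -->.
    print(kind, names)
```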

Funny you should mention that; I was just wondering whether Mitsuba is 'smart' enough to realize it has already loaded the same serialized file and does not have to reload it when searching for a new asset. I think the answer to that question might affect how to proceed. If Mitsuba can re-fetch an asset from an already referenced serialized file, then perhaps you should leave all the objects in a single giant file. But if there is a performance hit each time it has to load a big serialized file just to search for a single mesh, then a distributed approach might be the way to go.

Dividing them up by object type sounds like a good compromise as well. You might want to give particles their own serialized file too. I started playing around with supporting object-based particles, which is what got me thinking along these lines. Even with instances, defining 1,000-10,000 particles in XML can take a while to process (read and write).

I don't know if you have an official place for feature requests, but one thing that would be nice is one more option for Export Mode. Currently we have Export Only and Export+Render. It might be nice to also have Render Only, for cases when you have a large scene and don't want to wait for the exact same scene to export again. It should automatically fall back to Export+Render during animation.
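The requested fallback rule is small. Below is a minimal sketch with hypothetical mode names, since the add-on's real enum values may differ. It additionally assumes the mode should fall back when no previously exported scene file exists.

```python
import os

def effective_mode(mode, is_animation, scene_file):
    """Hypothetical modes: 'EXPORT', 'EXPORT_RENDER', 'RENDER' (render only)."""
    if mode == "RENDER" and (is_animation or not os.path.exists(scene_file)):
        # Each animation frame may differ, so it needs a fresh export; and
        # there is nothing to render if the scene was never exported.
        return "EXPORT_RENDER"
    return mode

print(effective_mode("RENDER", True, "scene.xml"))
```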

I was talking about the XML scene file, not the serialized file. In case anyone wants to edit or change anything in the XML scene file later, it would be better if the file had comments and a structure instead of everything being unsorted.

The serialized file is a very simple format for meshes only, and I think loading any single mesh from it should be very fast. I assume Mitsuba caches any mesh it has already loaded, but I am not sure whether it loads the whole serialized file at once or each mesh only when a shape node requires it.
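On the lazy-loading question, here is my understanding of the format described in the Mitsuba `serialized` plugin documentation. Each mesh is a small header (`0x041C` plus a version number) followed by its own zlib stream, and the file ends with a table of per-mesh offsets plus a mesh count. That layout lets a reader seek straight to `shapeIndex` without decompressing the other meshes. Below is a minimal sketch of that container logic with toy payloads instead of real mesh data. Treat the layout details (version 4, little-endian uint64 offsets, trailing uint32 count) as assumptions to verify against the docs.

```python
import struct
import zlib

MAGIC, VERSION = 0x041C, 4

def write_container(payloads):
    """Concatenate header + zlib stream per shape, then the offset dictionary."""
    out, offsets = bytearray(), []
    for data in payloads:
        offsets.append(len(out))
        out += struct.pack("<HH", MAGIC, VERSION)
        out += zlib.compress(data)
    # Dictionary: one uint64 offset per shape, then a uint32 shape count.
    out += struct.pack(f"<{len(offsets)}Q", *offsets)
    out += struct.pack("<I", len(offsets))
    return bytes(out)

def read_shape(blob, index):
    """Decompress only shape `index`, using the trailing dictionary."""
    (count,) = struct.unpack_from("<I", blob, len(blob) - 4)
    table_start = len(blob) - 4 - 8 * count
    offsets = struct.unpack_from(f"<{count}Q", blob, table_start)
    start = offsets[index]
    end = offsets[index + 1] if index + 1 < count else table_start
    magic, version = struct.unpack_from("<HH", blob, start)
    if magic != MAGIC or version != VERSION:
        raise ValueError("unexpected serialized shape header")
    return zlib.decompress(blob[start + 4:end])

blob = write_container([b"mesh A", b"mesh B", b"mesh C"])
print(read_shape(blob, 1))
```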

Particles (Hair and Volumes) have their own file format. I believe other types of particles need to be written in the xml file as shape instances. Maybe in the future I will try to implement something in Mitsuba to load large amounts of instances as particles or other types of particles in a format more efficient than xml.

I'll take note of your feature request. Do you know if there is a way to detect whether a geometry has been modified since the last time it was exported and needs to be rewritten?

The code for this is in the LuxBlend exporter: its render panel settings have a 'partial mesh export' check box, so if a mesh was not modified since the last render, it is not exported again. This can reduce export time from Blender. Since the Mitsuba exporter is based on LuxBlend, I think you can copy it too.
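I don't know how LuxBlend implements this check. One simple approach is to hash the data that goes into the serialized file and compare the result with the hash stored at the last export. Below is a minimal sketch. It assumes vertex coordinates and face indices are available as plain sequences (in Blender they would come from the evaluated mesh). `mesh_fingerprint`, `needs_export` and the cache dict are hypothetical.

```python
import hashlib
import struct

def mesh_fingerprint(vertices, faces):
    """SHA-1 over the vertex/face counts, packed coordinates and face indices."""
    h = hashlib.sha1()
    h.update(struct.pack("<QQ", len(vertices), len(faces)))
    for v in vertices:
        h.update(struct.pack("<3d", *v))
    for f in faces:
        # Prefix each face with its length so (0,1,2),(3,) != (0,1),(2,3).
        h.update(struct.pack(f"<I{len(f)}I", len(f), *f))
    return h.hexdigest()

def needs_export(name, vertices, faces, cache):
    """Update `cache` and report whether the mesh changed since last time."""
    digest = mesh_fingerprint(vertices, faces)
    changed = cache.get(name) != digest
    cache[name] = digest
    return changed
```

A real version would also hash UVs, normals and material assignments, since those go into the exported file as well.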

About sorting the XML: I doubt anyone would tweak large scenes in Notepad, since that is hard to read and maintain. But I guess it is good for developers, to find bugs. So I would give it low priority.


@fjuhec: I put together initial support for object-based particle systems. The code is a mixture of what I had come up with for the Pixie exporter and your matrix math from the abandoned exportSpaheGroup routine. This first draft does not implement instances; it just makes a new object derived from the particle source object at the particle loc/rot/scale for a given time. It filters for alive and dead particles as well.
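The per-particle transform described here is the usual translate × rotate × scale composition. Below is a minimal pure-Python sketch. It assumes each particle provides `alive_state`, `location`, a unit quaternion `rotation` in (w, x, y, z) order and a uniform `size`, as Blender 2.6x particles do. It keeps only 'ALIVE' particles, so the draft's exact filtering may differ. Plain stand-in objects let it run outside Blender. The space-separated output is meant for a row-major Mitsuba `<matrix value="..."/>` inside `<transform name="toWorld">`.

```python
from types import SimpleNamespace

def particle_matrix(loc, quat, size):
    """Row-major 4x4 matrix equal to Translate(loc) * Rotate(quat) * Scale(size).

    quat is a unit quaternion in (w, x, y, z) order; size is a uniform scale.
    """
    w, x, y, z = quat
    rot = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]
    # A uniform scale simply multiplies the rotation block; loc fills column 4.
    rows = [[rot[i][j] * size for j in range(3)] + [loc[i]] for i in range(3)]
    return rows + [[0.0, 0.0, 0.0, 1.0]]

def to_mitsuba_matrix(m):
    """Space-separated row-major values, e.g. for <matrix value="..."/>."""
    return " ".join(f"{v:g}" for row in m for v in row)

def alive_matrices(particles):
    """Transforms for particles whose alive_state is 'ALIVE' (others skipped)."""
    return [particle_matrix(p.location, p.rotation, p.size)
            for p in particles if p.alive_state == "ALIVE"]

particles = [
    SimpleNamespace(alive_state="ALIVE", location=(1.0, 0.0, 0.0),
                    rotation=(1.0, 0.0, 0.0, 0.0), size=0.5),
    SimpleNamespace(alive_state="DEAD", location=(0.0, 0.0, 0.0),
                    rotation=(1.0, 0.0, 0.0, 0.0), size=1.0),
]
for m in alive_matrices(particles):
    print(to_mitsuba_matrix(m))
```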

NOTE: I altered exportMesh to accept two new optional parameters to implement the particle support.

The `__init__.py` can be dropped into the export folder (overwrite it after backing up your original); then try out the test scene in the attached ZIP.

The orange cones are particles, the cube is the emitter.

Attachments

mitsuba_particle_support.zip (96.3 KB)

Oh wow, I just noticed this thread – seems like a lot has been happening to the plugin! Awesome!

Just a quick comment from my end: if you ever have serious suspicions about there being a bug in the renderer (e.g. invalid output, or something that produces a crash), I'd appreciate it if you could post a description of the problem on the bug tracker (https://www.mitsuba-renderer.org/tracker/) along with a copy of the Mitsuba input (i.e. the XML and .serialized files, along with all required resources such as textures). If it's a problem for you that the scene would be publicly downloadable on the tracker, then an email to me will work as well.

Thanks – keep up the awesome work,

Wenzel