I wrote up this proposal for the Bf-Funboard today, and I wondered if anyone has an opinion about it.

Basically, the idea is to reuse timing metadata that already exists in Blender in other places, without all the scripting nonsense people currently have to use as hacks.

Dear Devs,

Can we define the metadata of all ‘input movie’ types through their usage in the VSE?

Tracker:

Blender’s ‘Movie Clip’ asset type cannot store multiple start frames and durations; once set per scene, they are fixed. It can be iterated for the VSE but not for nodes.

Materials:

Blender’s Image Texture input needs its frame start and offset entered manually.

Compositor:

The Image/Movie Input node must similarly have an offset and frame start defined manually.

None of these media input types are linked to the exact same media as it appears in the VSE.

Suggestion:

Can we define any of these types from a VSE source strip? Once the source strip is placed in a scene (in the VSE), it is stored as an array of timing, duration and scene offset values, one entry per use. Currently this appears in the Outliner as numbered copies, e.g. Scene ‘Name’ - my_video.001, my_video.002.

It would be helpful to access this “timing / trimming” information (frame start/end and offset) from each of the related input types: Movie Clip, Movie Texture and the Image/Movie input node.
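
To make the “transfer” concrete, here’s a rough sketch of the arithmetic the scripting hacks end up doing. It is plain Python, not bpy: `StripTiming` and `ImageUserTiming` are hypothetical stand-ins whose field names are meant to mirror the VSE strip’s `frame_start` / `frame_offset_start` / `frame_final_duration` and the node’s Start Frame / Offset / Frames fields. The frame mapping is a simplified model (no hold offsets, speed effects or cyclic playback), not Blender’s actual code.

```python
from dataclasses import dataclass


@dataclass
class StripTiming:
    """Hypothetical stand-in for a VSE movie strip's timing (plain data, not bpy)."""
    frame_start: int           # timeline frame where the untrimmed clip would begin
    frame_offset_start: int    # frames trimmed off the head of the clip
    frame_final_duration: int  # visible length after trimming

    @property
    def frame_final_start(self) -> int:
        # First visible frame of the trimmed strip on the timeline.
        return self.frame_start + self.frame_offset_start


@dataclass
class ImageUserTiming:
    """Hypothetical stand-in for a node's Start Frame / Offset / Frames fields."""
    frame_start: int
    frame_offset: int
    frame_duration: int


def timing_from_strip(strip: StripTiming) -> ImageUserTiming:
    # Start playback where the trimmed strip becomes visible and skip the trimmed head.
    return ImageUserTiming(
        frame_start=strip.frame_final_start,
        frame_offset=strip.frame_offset_start,
        frame_duration=strip.frame_final_duration,
    )


def vse_source_frame(strip: StripTiming, scene_frame: int) -> int:
    # 1-based source frame the strip shows at scene_frame (simplified model).
    return scene_frame - strip.frame_start + 1


def source_frame_at(user: ImageUserTiming, scene_frame: int) -> int:
    # Assumed node mapping: 1-based source frame, clamped to the playable
    # range (no cycling), then shifted by the offset.
    frame = scene_frame - user.frame_start + 1
    frame = max(1, min(frame, user.frame_duration))
    return frame + user.frame_offset
```

For example, a strip placed at frame 1 with its first 20 frames trimmed and 50 frames visible gives the node Start Frame 21, Offset 20 and Frames 50, and within frames 21 to 70 the node then shows the same source frame the VSE does. This is exactly the bookkeeping that could be read from the VSE datablock instead of being typed in by hand.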

If you don’t want to use the “VSE Defined” version, you would simply not select it from the dropdown of loaded sources.

I believe that all of the metadata is already available in VSE datablocks. What is wrong with simply transferring frame values between datablocks?

How would this break dependencies?

For me the big issue is that all of the data is already available in the datablocks; it just isn’t available anywhere useful. At the moment you simply cannot say “I want to promote a shot in the VSE to compositing” or “I want to send a clip of media to texture a plane in 3D at this exact time”. You have to fiddle around with timing and either guess or write down times from elsewhere. Then you have to do the offset frame dance, which often involves banging your head against the keyboard.

Anyway, what do you think? I wonder if there are any devs around (like psy-fi or ideasman42) who might give an insightful opinion.

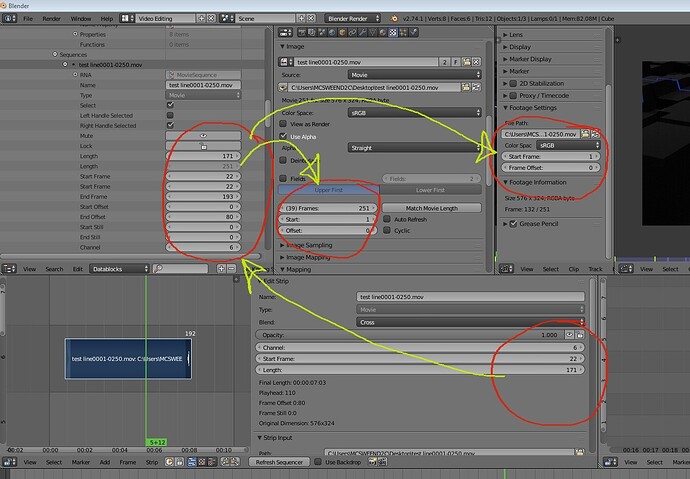

Here’s an example of the problem: these all use the same source but cannot access the same timing info.