Some years ago, Nicholas Bishop worked on unlimited detail vertex painting, called Ptex, in blender.

It was a great, useful project, but no one seems to talk about it any more, nor was it ever merged to trunk.

As far as I remember, the reason was that it was not compatible with the render engines or something like that.

Will this ever be finished? Would sure be cool to have textures, normal maps, etc. without even needing UV maps.

There’s been talk of it being implemented after OpenSubdiv, because that plays really nicely with Ptex.

Bishop isn’t working for the BF anymore, so likely someone else will take care of it.

Thanks to the amazing community willing to help and the massive amount of money dropped for Gooseberry they will surely add OpensubD, Ptex, new hair system, new clothes, nodes everywhere, new viewport, parametric modeling, alembic, open vdb, pbr realtime engine bla bla bla and much more!1!!11 Hold on!

The Ptex system will need to learn to scale well in raytracing before it gets implemented in Blender.

It may work fine when multi-threaded in the RenderMan environment, but a pathtracing environment is a whole different can of worms.

This is why Solid Angle doesn’t have it working in Arnold: it would slow the engine down to the speed it would have if using a single core.

So it’s a pretty good idea, but it has a glaring weakness that would ultimately prevent its use in Cycles as of now.

It might be useful for a texturing workflow if the implementation has the ability to bake from a Ptex texture to a UV map.

Kind of like what ZBrush and Mudbox do. It allows you to start texturing before the model is UV unwrapped: focus on quality with no UV restrictions and finally, once you are happy, UV unwrap and then bake onto the UV map.

It is a really nice way to work.

So Cycles is not the only place where it can be useful. So screw Cycles. Get this in so texturing gets even better.

Ptex to texturing is kind of what dyntopo is to sculpting.

I believe that there is no way to bake this data in Blender, as neither the internal renderer nor Cycles supports it. Is this correct?

Hopefully Cycles will in the future at least be able to bake the data down into a texture map (which, to be honest, is probably enough).

But in the end, you still end up using a UV-mapped texture, which defeats the purpose of using a technology that claims to be ‘the end of UV maps’ and to allow ‘ultra-high detail without the need to first create a massive image map’.

If in the end, you need a 16K or 32K image map to actually have the results nice and fast in a pathtracer, you might as well just go ahead and make the image map (skipping the Ptex step).

That’s a bunch of dishonest baloney, if you ask me. I see no reason it couldn’t be made to work for raytracing or that it would scale badly with multiple threads (I haven’t heard any such complaints from the vray guys, for example). What the guys at Arnold (presumably) did is to use the Ptex implementation meant for editing (hence the bad threading performance due to locking) and hook that into their application. For good performance, you have to create your own implementation that suits your application.
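To illustrate the locking point, here is a minimal sketch in Python of the two cache designs. It is not the Ptex API and not how Arnold, V-Ray or Cycles actually work: `load_face_texels`, `LockedCache`, `PerThreadCache` and `render` are hypothetical names made up for this example. The first cache guards everything with one lock, so all render threads queue up on it; the second keeps a separate cache per thread, so lookups never contend.

```python
import threading


def load_face_texels(face_id):
    """Hypothetical stand-in for reading one face's texels from disk."""
    return [face_id * 10 + i for i in range(4)]


class LockedCache:
    """One shared cache; every lookup takes the same lock."""

    def __init__(self):
        self._lock = threading.Lock()
        self._faces = {}

    def get(self, face_id):
        with self._lock:  # all render threads serialize here
            if face_id not in self._faces:
                self._faces[face_id] = load_face_texels(face_id)
            return self._faces[face_id]


class PerThreadCache:
    """Each thread keeps its own cache, so lookups never wait on a lock."""

    def __init__(self):
        self._local = threading.local()

    def get(self, face_id):
        faces = getattr(self._local, "faces", None)
        if faces is None:
            faces = self._local.faces = {}
        if face_id not in faces:
            faces[face_id] = load_face_texels(face_id)
        return faces[face_id]


def render(cache, face_ids, n_threads=4):
    """Have each thread look up the first texel of every requested face."""
    results = [None] * n_threads

    def worker(i):
        results[i] = [cache.get(f)[0] for f in face_ids]

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Both caches return the same texels; the difference is only in who waits for whom. The per-thread version pays for its lock-free lookups with duplicated memory, which is one reason a renderer would want a cache designed for its own access pattern.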

Cycles would do well with a texture caching system that also supports Ptex, alas it’s just a matter of finding someone willing to implement it. Nicholas Bishop already stated that Blender development isn’t feasible for him.

You do… however, there is value in doing the art first and the technical work afterwards in a pipeline.

It is like the idea of sculpting something and after you have it perfect, then going with retopology.

Similarly, if you have ptex textured something, uv-unwrapping it after that would be a more informed process that is affected by how the object/character has been textured.

If you have worked in zbrush or mudbox, you would very well understand the value of that.

UV unwrapping is such a buzzkill. When you have dyntopo-sculpted some mesh, you cannot just start texturing it like you would in ZBrush and Mudbox.

Instead, Blender makes you do all this technical stuff: retopologizing it, UV unwrapping it, etc. By the time you get to texturing, you have lost the momentum that you built up during sculpting.

Because they let you skip all these steps and leave them for the end, I believe that ZBrush and Mudbox are better artistic tools than Blender. They let the artist get the vision first and, when happy, then do the technical stuff.

Cycles is a whole other story. Some people don’t even use it.

Using Ptex in a pipeline like that is useless. It makes way more sense to just use one of the dozen or so smart unwrapping algos out there, or even just unwrap each face individually, and then rebake to a proper UV set at the end. Having to figure out where your highest texel density Ptex patch is and then make an image that can match that fidelity is not only wasting time and effort, but is just asking for problems. Ptex works because you can render with it. Without that ability, it’s kind of useless.

In terms of getting industry standards into Blender/BI/Cycles, I think a more widely used feature (and a lower hanging fruit) is UDIM support.

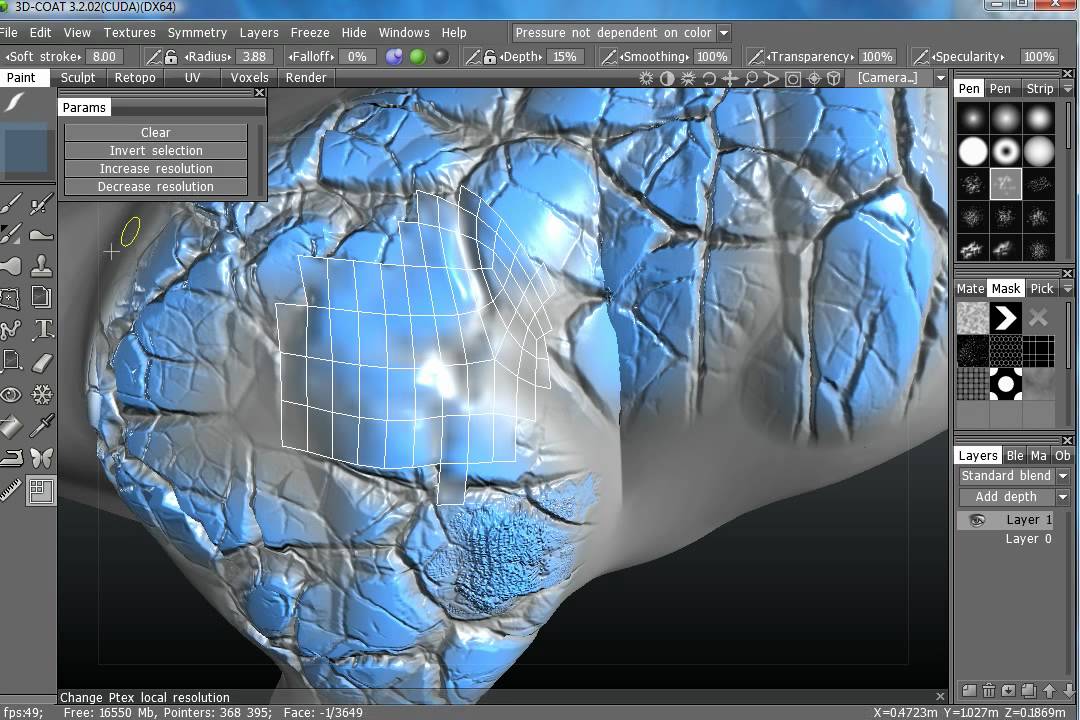

It’s not only ZBrush and Mudbox that support Ptex: it’s easy to get started with painting Ptex textures in 3DCoat as well, and then convert to a UV map/texture.

It works fine in V-Ray, so there should be no problems implementing it in Blender/Cycles. Brecht even had a working prototype of Ptex for Cycles at BConf some time ago.

Also, Ptex really shines when it is working directly in the render engine, yes, but painting a Ptex texture and baking it to a map later is far from useless, contrary to what some people here seem to think.

I read the same excuses for OpenSubdiv. It’s hilarious.

It is not Blender that is not ready for awesome new open source Pixar technology. No, it is the other way around!

OpenSubdiv and Ptex are not ready for Blender yet. We must wait until Pixar personally makes them ready for Blender…

Ptex would be great to have! I would like to look into it, but I fear I may be too busy with other stuff for the near future. If anyone is interested in hacking on it, you could ask Nicholas or me (he was kind enough to provide me a patch with the latest state of the project).

It is so useless for texturing stuff that it is one of the selling points of Mudbox

and 3DCoat.

Would you stop putting Cycles on top of everything else? We cannot have X or Y because Cycles doesn’t support it yet. Why is Cycles the first thing that must be implemented for everything? Cycles is at the end of the pipeline. We do not need Cycles support in order to enjoy Ptex in a pipeline.

3DCoat had Ptex painting 4 years ago, for god’s sake.

@Psy-Fi > if you need bug hunters count me in!

I didn’t say it was useless… I said it was useless for a case where you’re going to have to end up UV unwrapping and rebaking anyway. 3DCoat and MudBox use it as a selling point because 1.) it’s a Sexy New Feature and 2.) most renderers support it now.

Using Ptex in a pipeline where you don’t intend to render with an engine that includes Ptex support is useless. As I said before, the ONLY benefit of using Ptex at all is freedom from UV mapping. In the Ptex workflow each patch can have a different texel density. Trying to come up with some arbitrary way to figure out how the texel density of your highest density patch translates to the amount of space/pixels on a traditional UV map is either going to cause a loss in detail or an overly large texture map.
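To put rough numbers on that trade-off, here is a minimal sketch in Python. The face counts and resolutions are made-up illustration values, all faces are assumed to cover the same surface area, and packing waste and margins are ignored. The function names are hypothetical, not part of any Ptex or Blender API. It compares the texels actually painted with the texels a uniform-density UV map would need in order to match the densest patch everywhere.

```python
import math

# Hypothetical mesh: (texels per side of a face, number of such faces).
FACES = [(64, 90), (256, 9), (1024, 1)]


def texels_stored(faces):
    """Texels actually painted, each face at its own resolution."""
    return sum(res * res * count for res, count in faces)


def texels_matching_highest(faces):
    """Texels needed if every face is baked at the highest face resolution."""
    highest = max(res for res, _ in faces)
    return highest * highest * sum(count for _, count in faces)


def atlas_side(texel_count):
    """Side in pixels of the smallest square image holding texel_count texels."""
    return math.isqrt(texel_count - 1) + 1  # integer ceil(sqrt(n)) for n >= 1


stored = texels_stored(FACES)
matching = texels_matching_highest(FACES)
print("painted texels:", stored, "-> square side", atlas_side(stored))
print("uniform texels:", matching, "-> square side", atlas_side(matching))
```

With these numbers the painted data would fit in a square of about 1.4K pixels per side, while matching the densest patch everywhere needs a 10K square, roughly 52 times as many texels. Anything in between loses detail on the dense patch, which is the dilemma described above.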

The toolset required to properly support Ptex within Blender (even just painting) will take quite a bit of work to get into place simply because of what the system is capable of. And considering that neither of the built-in renderers that the vast majority of Blender users utilize supports Ptex rendering, I doubt it’s high on the Foundation’s to-do list.

Having used Ptex, UDIM layouts, traditional UV mapping of all kinds, and pretty much everything else under the sun, I’d tend to agree with them. Ptex is not the be-all-end-all of texture painting. It avoids the unwrapping step, but comes with its own headaches and issues (just like every other method) like wrangling texel densities, incompatibility even between packages that claim to support it, comparatively slowed render times, inability to do 2D edits, and plenty of others. I get the feeling that most people clamoring for Ptex support ASAP have never actually worked with it in any kind of production and are only going off of the neat tech demos they’ve seen on the internet.

I also think that drawing without UVs, even though you will have to UV map it for rendering anyway, is awesome and comfortable. It’s all about not losing creative momentum until the very end, and making the texture the guide for UV sizes, etc.

Would be really great indeed… assuming performance follows, because if it’s the same as trying to vertex paint a sculpt in Blender, it will be a pain.

Again, why not just use smart unwrap or mark all edges as seams if you want to use that approach? A hell of a lot easier than setting up Ptex IMO.

You’re losing significant texture space on margins that way. The nice thing about ptex is that it can interpolate across polygons.

But yes, assuming you set up your texture to be some 4x the size of your target texture resolution, it’s a feasible approach to use smart unwrap and all seams. I’ve tried it a couple times and it worked well enough. Personally, I prefer just unwrapping the model though. It doesn’t take all that much time anyway, and it’s much friendlier to editing in Photoshop.
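For a feel of how much space the margins eat when every face becomes its own island, here is a rough sketch in Python. It assumes square islands with the same padding on all four sides; the island and margin sizes are made-up illustration values and `useful_fraction` is a hypothetical helper, not a Blender function.

```python
def useful_fraction(island_px, margin_px):
    """Share of a padded square tile that holds real texels, not margin."""
    tile_px = island_px + 2 * margin_px  # margin on both sides of the island
    return island_px ** 2 / tile_px ** 2


for island_px in (8, 16, 64):
    share = useful_fraction(island_px, margin_px=4)
    print(f"{island_px}px island, 4px margin: {share:.0%} of the tile is usable")
```

With a 4 pixel margin, an 8 pixel island uses only a quarter of its tile, while a 64 pixel island uses about 79%. So the smaller the per-face islands, the more of the image goes to padding.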