Hey all:

This is what I answered in the e-mail:

The concept of UV Pass is pretty easy:

UV Pass stores the information about COORDINATES of input image.

UV as such (not UV Pass) is a way of wrapping a 2D image onto 3D geometry, right? You take a flat image and wrap it around 3D objects. When you do it in the UV image editor, you simply say which pixels of your image should be displayed on which part of the object.

UV Pass is used to re-create this process in compositing. We are no longer working on 3d geometry, but we have only 2d images. Our render is 2d and UV pass is 2d as well.

To point at a spot in a 2D image we need two values: X and Y.

Those values are stored in the UV Pass. This pass, like any other image, has 4 channels: R, G, B and A. We don’t need all of them. We need only two. The X coordinate is stored in the Red channel and the Y coordinate in the Green channel. You’ll notice that Blue and Alpha of the UV-Pass are 1.0 all over the image. Those channels are simply ignored by the calculations.

What do the values of R and G in UV-Pass mean?

Bottom Left corner of the image is considered to have co-ordinates of X:0.0 and Y:0.0.

Top Right corner of the image is considered to have co-ordinates of X:1.0 and Y:1.0.

So say your input image has resolution of 1000 pixels by 500 pixels.

Let’s analyze one single pixel of your UV-Pass. Let’s say that its values are as follows:

R: 0.600

G: 0.200

B: 1.000

A: 1.000

B and A are ignored. Only R and G will be taken into account.

Let’s give each pixel of our input image a pair of numbers: its X coordinate and its Y coordinate. The bottom left corner will have coordinates (0, 0). The top right corner will have (999, 499), because our image has a resolution of 1000x500 pixels. We start at zero, so we have to end at 999 on X, not 1000, and at 499 on Y, not 500. Otherwise we’d get a resolution of 1001x501, because pixel (0, 0) counts as well.
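To make the off-by-one point concrete, here is a tiny Python sketch. The helper name `corner_coordinates` is made up for this post; it only restates the counting argument above and has nothing to do with Blender's own code.

```python
def corner_coordinates(width, height):
    """Return the (x, y) indices of the bottom left and top right pixels."""
    bottom_left = (0, 0)
    # Indices start at 0, so the last index is one less than the pixel count.
    top_right = (width - 1, height - 1)
    return bottom_left, top_right


print(corner_coordinates(1000, 500))  # ((0, 0), (999, 499))

# Counting the indices 0..999 gives back the 1000 pixels of one row.
print(len(range(0, 999 + 1)))  # 1000
```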

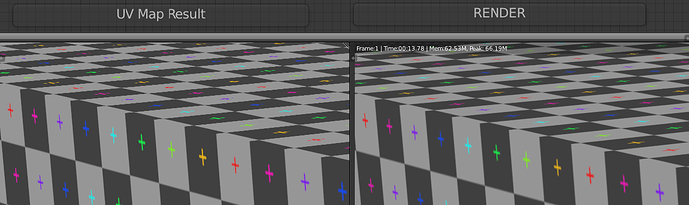

When we plug our image into the “Image” input of the “Map UV” node and our UV-Pass into its “UV” input, this is what will happen:

One of the pixels of UV-Pass has values as described above (0.600, 0.200, 1.000, 1.000)

Those values tell which pixel of our image should be displayed on this pixel.

So we take 0.600 * 999 = 599.4 and we have X coordinate.

Then we do: 0.200 * 499 = 99.8 and we have Y coordinate.

Those values will probably be rounded, but I’m not sure. Maybe they get interpolated in some smart manner. Anyway, for simplicity’s sake let’s round them.

We get coordinates of: X: 599 and Y: 100.

This means that the pixel we are now analyzing will display the pixel of our input image that sits at coordinates (599, 100).
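The arithmetic above can be written as a short Python sketch. This is only my simplified model of the lookup, not Blender's actual implementation: it rounds to the nearest pixel (the real node may interpolate), and the names `uv_to_pixel` and `map_uv` are invented for illustration. Images are plain lists of rows, and I assume row 0 is the BOTTOM row so that it matches the bottom left origin used in this post.

```python
import math


def uv_to_pixel(u, v, width, height):
    """Turn relative UV values (0.0 to 1.0) into integer pixel coordinates."""
    # Scale by the highest valid index, then round to the nearest pixel.
    x = math.floor(u * (width - 1) + 0.5)
    y = math.floor(v * (height - 1) + 0.5)
    return x, y


def map_uv(image, uv_pass):
    """For every UV-Pass pixel, fetch the input pixel that it points at.

    image   -- list of rows of pixel values, row 0 is the bottom row (assumption)
    uv_pass -- list of rows of (r, g, b, a) tuples; only r and g are used
    """
    height = len(image)
    width = len(image[0])
    result = []
    for uv_row in uv_pass:
        out_row = []
        for r, g, _b, _a in uv_row:  # blue and alpha are ignored
            x, y = uv_to_pixel(r, g, width, height)
            out_row.append(image[y][x])
        result.append(out_row)
    return result


# The UV-Pass pixel from the example, looked up in a 1000x500 input image.
print(uv_to_pixel(0.600, 0.200, 1000, 500))  # (599, 100)
```

Note that the output has the size of the UV-Pass, not of the input image: each UV-Pass pixel just picks one input pixel.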

Note that the values of the UV-Pass are relative. When you plug in an image that has a different resolution than the image you used for unwrapping, you may get results you don’t expect. The resolution as such is not that important, but the aspect ratio should be maintained.
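Here is a small Python sketch of what "relative" means in practice. It uses the same simplified nearest-pixel model as the worked example (scale by the highest index, then round), which is my assumption and not necessarily what Blender does internally. The helper `uv_to_pixel` and the list of resolutions are made up for illustration.

```python
def uv_to_pixel(u, v, width, height):
    """Turn relative UV values (0.0 to 1.0) into integer pixel coordinates."""
    # int() truncates, so adding 0.5 first rounds non-negative values to nearest.
    return int(u * (width - 1) + 0.5), int(v * (height - 1) + 0.5)


# The same UV-Pass pixel (R: 0.600, G: 0.200) applied to three input images.
for width, height in [(1000, 500), (2000, 1000), (500, 500)]:
    print((width, height), uv_to_pixel(0.600, 0.200, width, height))
```

The 2000x1000 image is sampled at the same relative spot as the 1000x500 one, just with more pixels. The 500x500 image is also sampled at 60% across and 20% up, but since its proportions differ from the image used for unwrapping, the mapped picture will look stretched or squashed.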

The other thing we need to take into account is that the way the Map UV node works is not perfect. When distortion is big (surfaces not facing the camera), weird results may occur. I’m not sure if this is Blender specific or if it can be fixed without introducing some heavy computation that would substantially increase rendering time.